Mental phenomena, all conscious and unconscious mental phenomena, visual or auditory experiences, experiences of pain, tickles, itching, thoughts, certainly the entirety of our mental life, result from the processes that take place in our brains (Searle, 1995)

General structure of visual pathway

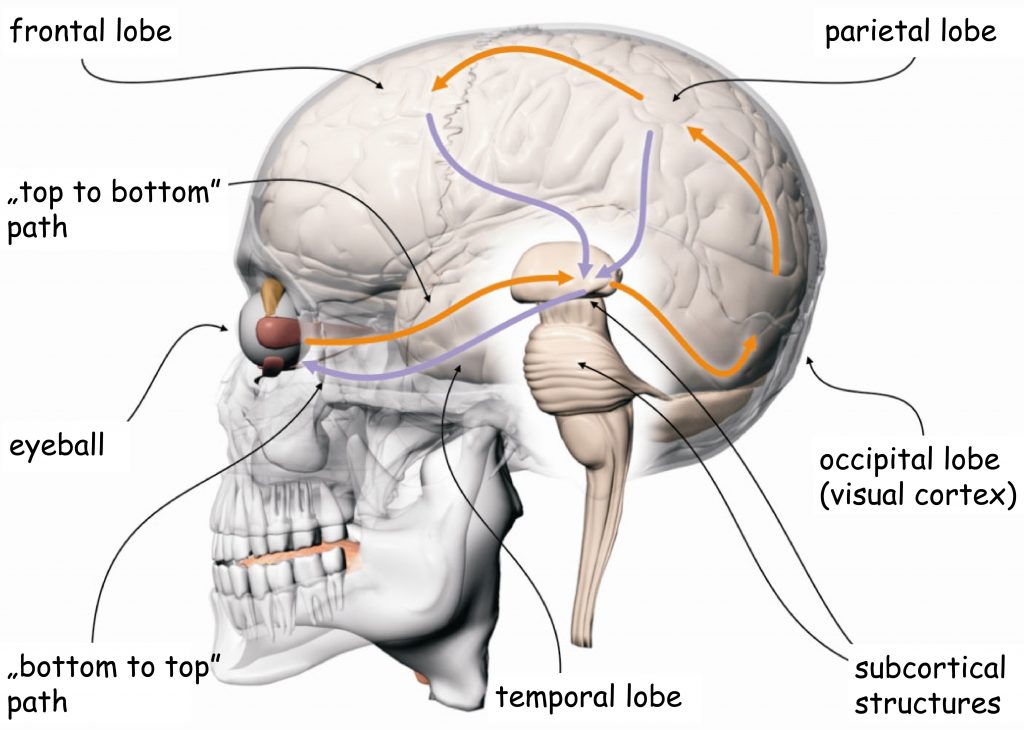

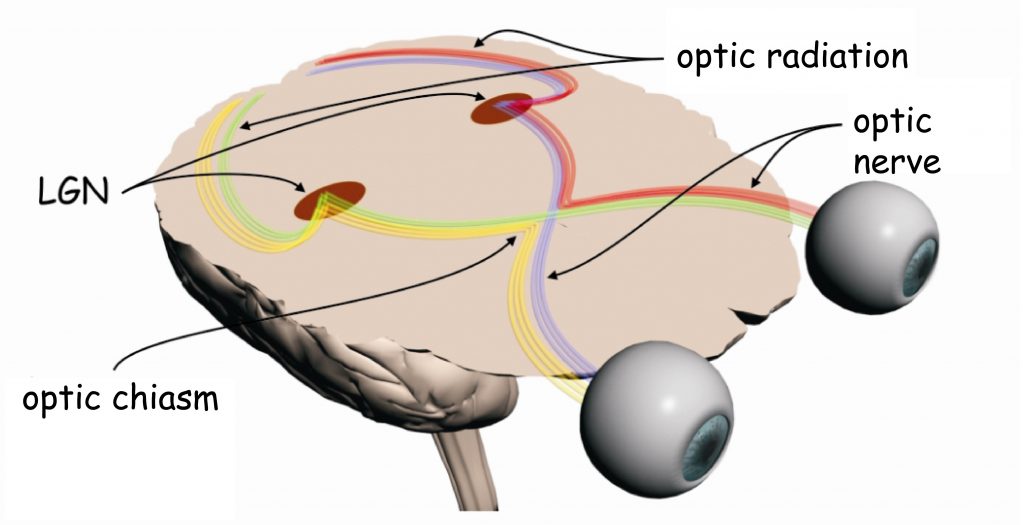

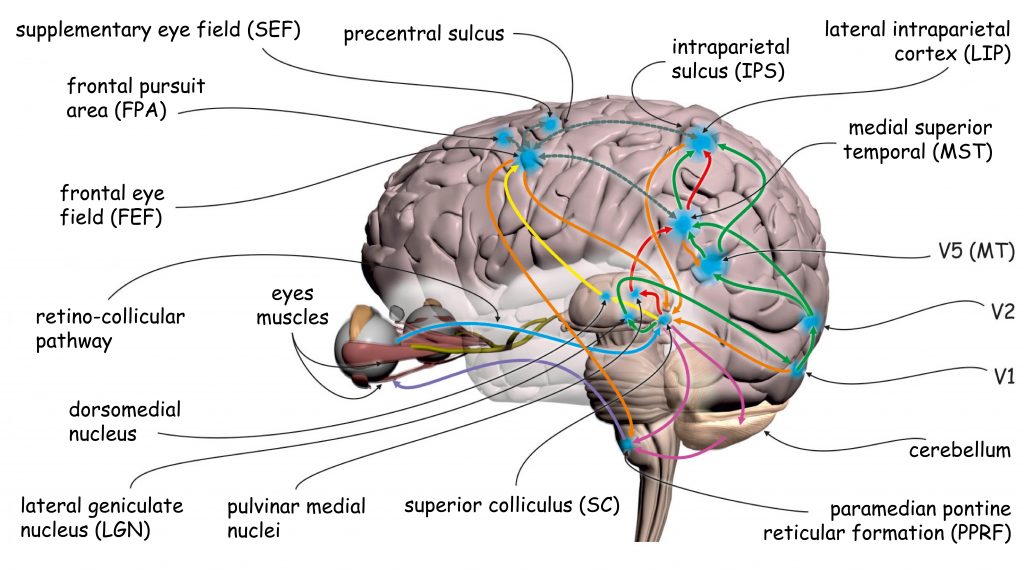

Brain processes underlying the experience of seeing are conducted by neural networks, which form the so-called visual pathway. It is a functionally and anatomically complex biological structure which, to put it very simply, consists of three modules. The first module is eyes, more precisely their optical systems and retinas. The second module consists of all subcortical structures located between the eyes and the cerebral cortex, particularly in the occipital lobe. And the third module — most complicated and least known, yet, as it seems, most important for seeing — consists of interconnected cortical areas in various brain lobes (Fig. 4).

The division of the visual pathway into three parts results from the roughly described functions performed by the structures located in each of them. The first module — the eyes — is responsible for recording the light and the initial organization of the sensory data. In the second module, these data are ordered and categorized. Both these parts form the so-called early stage of the visual pathway. In the third module, most complicated in terms of function and structure, the sensory data are analyzed, and then integrated and synthesized. The cooperating brain structures which form this block are referred to as higher or late stages of sensory data processing. The final effect of the work of all these modules is the subjective experience of seeing.

Top-down and bottom-up processes

The direction of the flow of neural impulses in the visual pathway was depicted in Fig. 4 with arrows. The orange arrows, which indicate the order from the eyes through the subcortical structures into the cerebral cortex, represent the so-called bottom-up processes of sensory data processing. Following this direction of the sensory data flow, the lobes of the cerebral cortex receive data on the distribution of light reaching the eyes of the observer within the scope in which the photoreceptors record them, and the neurons connected to the photoreceptors — forward them. It means that if a part of a visual scene is, for example, brighter than another, the receptors which are more intensively illuminated will react more strongly, proportionately to its brightness. Such information will be sent to the top, towards the center, namely towards various parts of the cerebral cortex.

It could seem that when we are talking about visual perception, the bottom-top direction of sensory data processing is the only possible way of getting to know the world with our vision. The eyes, like a camera, record the light, and the brain interprets its distribution, creating the content of the subjective experience of seeing. As a result, the observer knows what is in front of their eyes—nothing further from the truth. The everyday experience provides thousands of examples that contradict the principle of mechanical video recording using eye-cameras. It does not mean, however, that there are no similarities between the eyes and the automated means of image recording. But there is one fundamental difference — cameras do not think about the world they record. All they can do is indicated that there is, for example, a human face in the frame. They have no idea, however, to whom it belongs and what relationship we have with its owner.

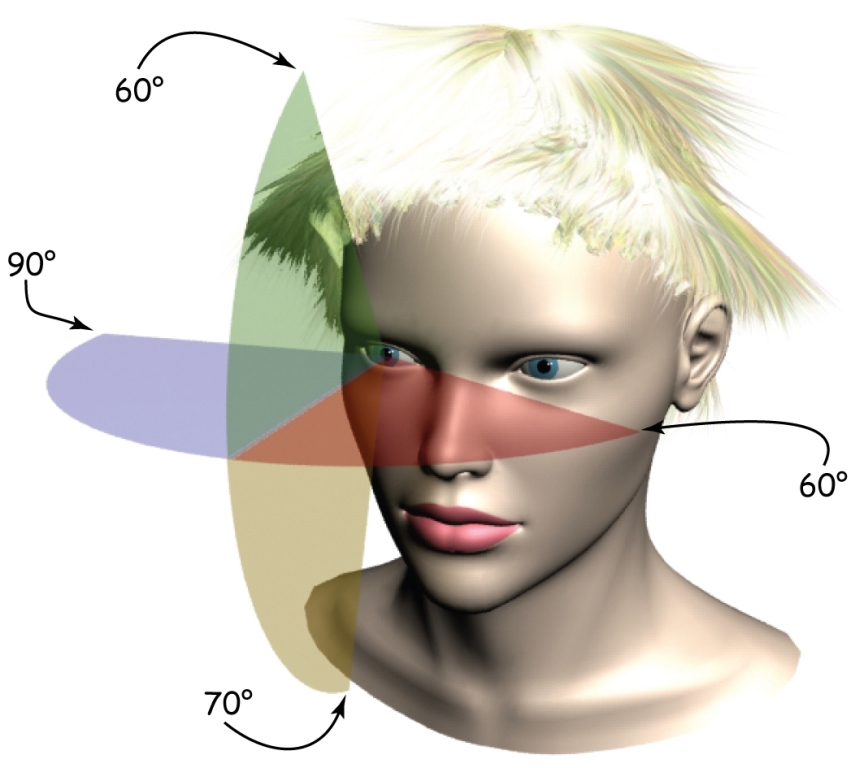

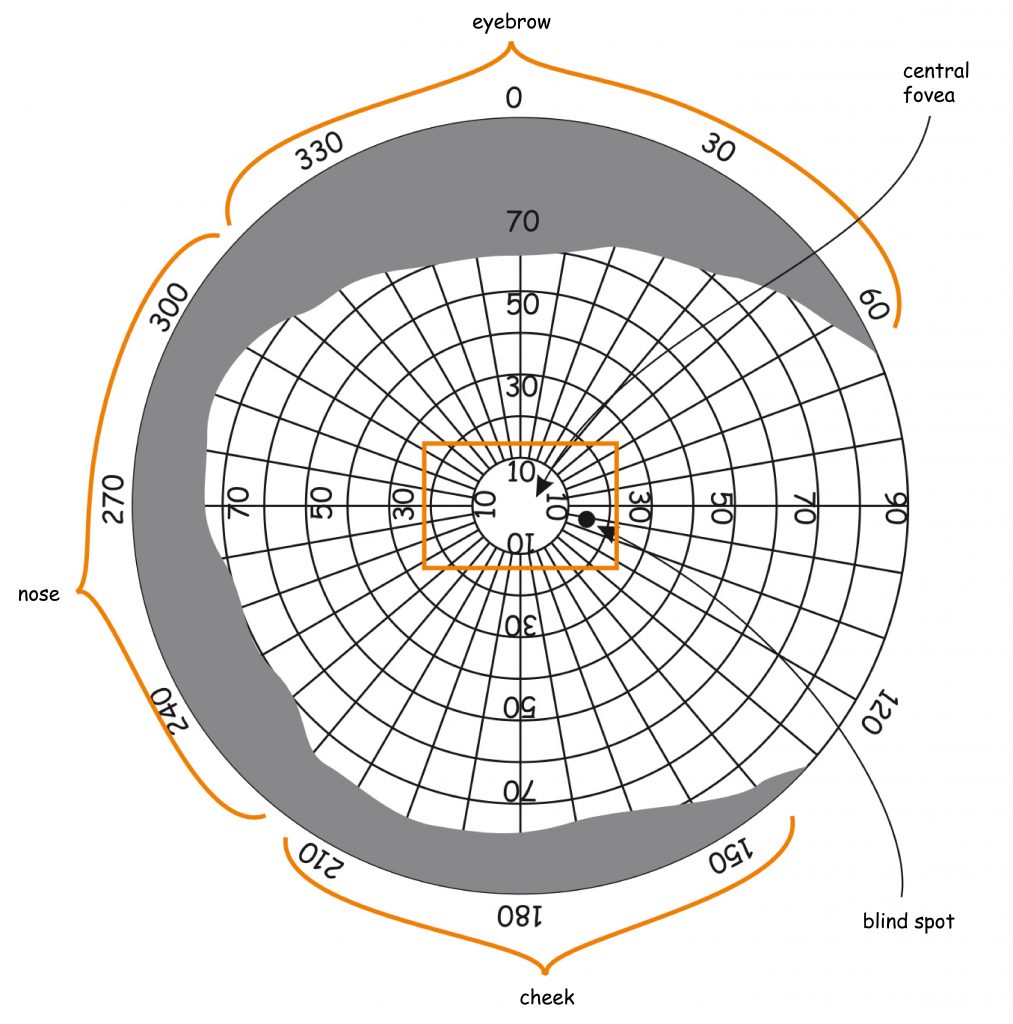

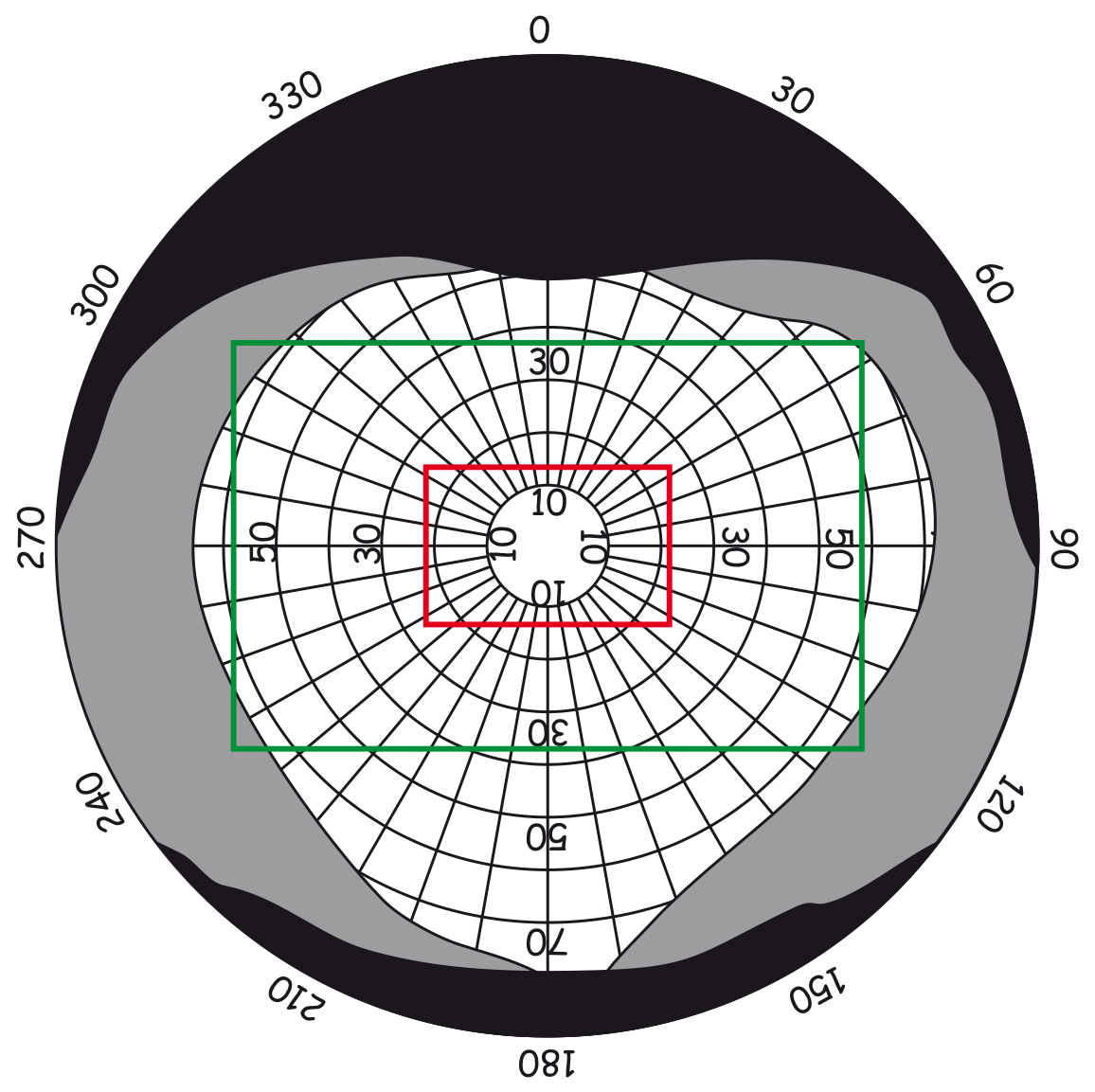

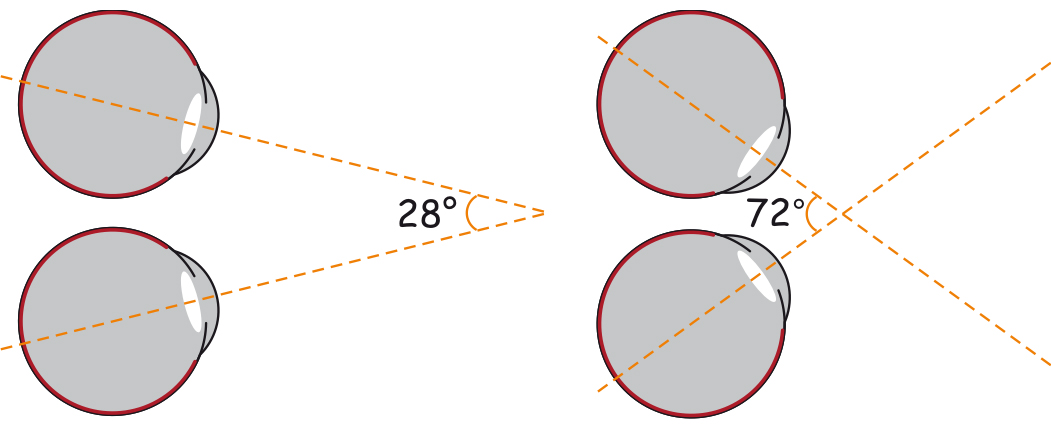

Even though the scope of human binocular vision in the horizontal plane is approximately 180°, and in the vertical plane — about 130°, we do not perceive all objects in the area with the same clarity. Moreover, we may not notice the presence of some objects at all if they are atypical or insignificant from the perspective of the task being carried at the moment. We may, for example, overlook a gorilla, strolling the basketball court between the players passing the ball to each other, when we concentrate on counting the passes (Simons and Chabis, 1999). These properties of the visual systems were intuitively used by the masters of cinematography, first and foremost, Alfred Hitchcock.

We can also erroneously presume the presence of some objects in a visual scene only because we have frequently seen them in similar situations. We can, for example, be sure that we have seen a light switch on the wall next to the door, even though it was not there. To put it briefly, the brain actively processes the sensory data and assesses their usefulness in terms of the currently performed task. This process may as well result in ignoring quite large groups of sensory data or, on the contrary, stimulating the muscles coordinating the eyeball movement to relocate vision from one part of a scene to another to obtain new data. All these processes are generally called top-down processes. In Fig. 4, the direction was marked with purple arrows pointing downwards.

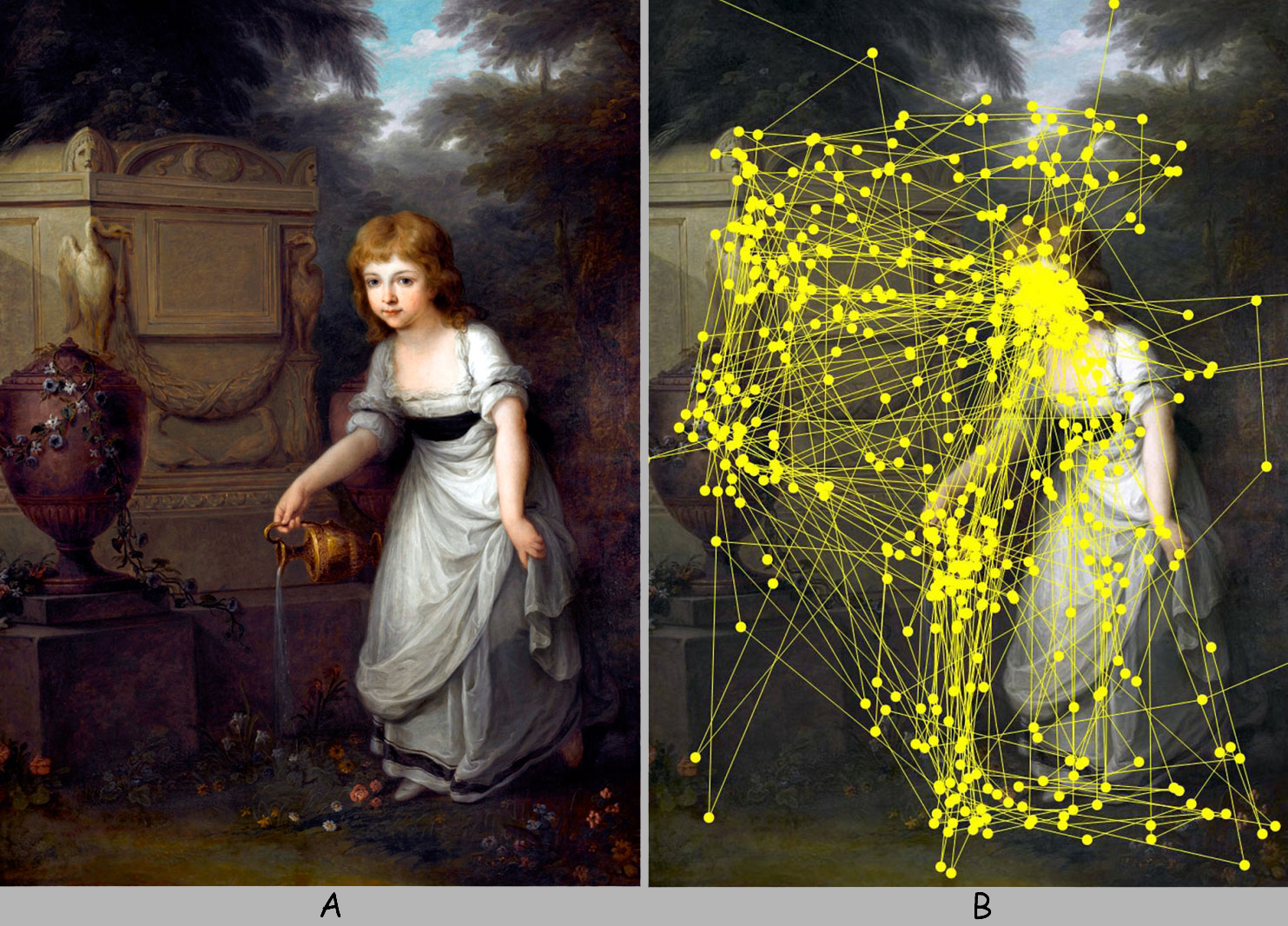

The top-down processes manage sensory data processing, meaning that they filter the data based on the type of the currently performed task, the intention, the need, the attitude, the beliefs, knowledge, or expectations of an observer. Their results affect the movement of eyeballs, direct the visual axes on those elements of the painting that require more in-depth analysis. Comprehensive experimental studies regarding the role of attitude understood as generalized preparedness to a specific form of response, also in terms of visual perception, were carried out as early as in the 1960s by Dimitri Uznadze’s students as a part of the so-called Georgian school of psychology (Bżaława, 1970; Prangiszwili, 1969; Uznadze, 1966).

In general, the subjective visual experience is a result of sensory data processing via processes which organize data from bottom to top and “push” them towards higher levels of the brain and top-down processes that filter and modify these data depending on current needs, beliefs, or knowledge of the observer as well as have a top-down effect on the framing of other elements of a visual scene.

Content analysis and visual scene framing systems

In Fig. 4 many different brain structures, which participate in sensory data processing in early and late stages of the visual pathway, are highlighted. These structures are connected with one another in a non-accidental manner as are the transistors on the radio’s circuit board. Particular brain structures (called nuclei, areas, lobules, sulci, gyri etc.) are connected with one another through axons of neurons, i.e. the cords through which nerve impulses travel from one cell body to another. There are structures in this biological system which not only transmit nerve impulses to other structures but also directly or indirectly receive feedback from other structures. Moreover, within different brain structures there are also complicated connections between the neurons that create those structures. The network of all these connections is really complicated. However, on the basis of functions performed by different networks of cooperating neuron clusters that take part in visual data processing, two main systems can be distinguished. Those are: framing system and visual scene content analysis system.

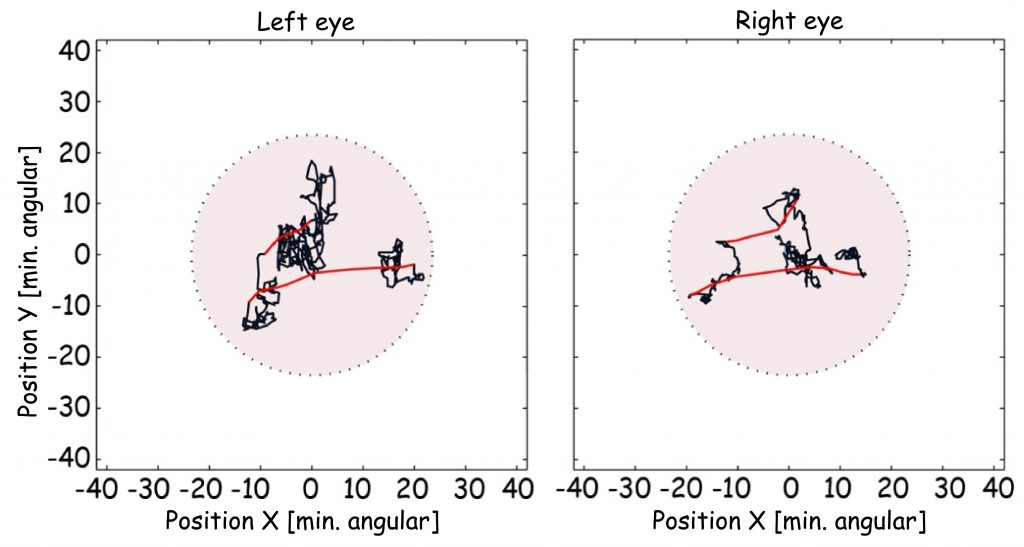

Every vision experience incorporates only a fragment of a larger whole. We see as if in frames. We cannot simultaneously see everything that is happening around our heads. Viewing something is therefore a sequence of frames — the views of things, limited by the field of vision. In order to see the elements of the scene that are out of view, it is necessary to change the location from where the scene is currently viewed or, while remaining in the same location, the position of eyes or head needs to change. This function is performed by the neural visual scene framing system which controls the movement of eyeballs (as well as the movement of the head and the whole body), fixating the visual axes in the most interesting parts of the visual scene.

As one might imagine, the difference between viewing any visual scene and viewing a picture is that regardless of the natural limitations of the field of vision, the picture also has its boundaries. In the case of museum paintings, the boundaries are defined by frames that separate the work’s painted surface from the wall. The frames of the image could just as well be the edges of a cinema screen, television screen, computer screen, the outline of a photo in a newspaper as well as a theatre curtain or, even more conventional yet also existing, the boundaries within which a play in an urban space or a performance hall takes place. Viewing an image requires ignoring anything that lies beyond its boundaries, especially when the natural field of vision also covers that space. The color or texture of the wall where the painting is hung are not a part of the image. Therefore, viewing an image requires, above all, respect for the spatial boundaries the image imposes. Viewing an image and seeing that image in a space such as a museum are qualitatively two completely different acts of vision.

The second important feature of vision is that the frame designated by the field of vision or the frames of the image always has certain meaning. It consists of the elements making up the scene, colors, background, spatial composition, movement. Understanding of a visual scene requires that its features be analysed and confronted with existing knowledge and visual experience. It is implemented through the neural system of image content analysis. The basic function of this system is to lead to a subjective vision experience, although it does not necessarily need to be equivalent to the understanding of what is currently seen.

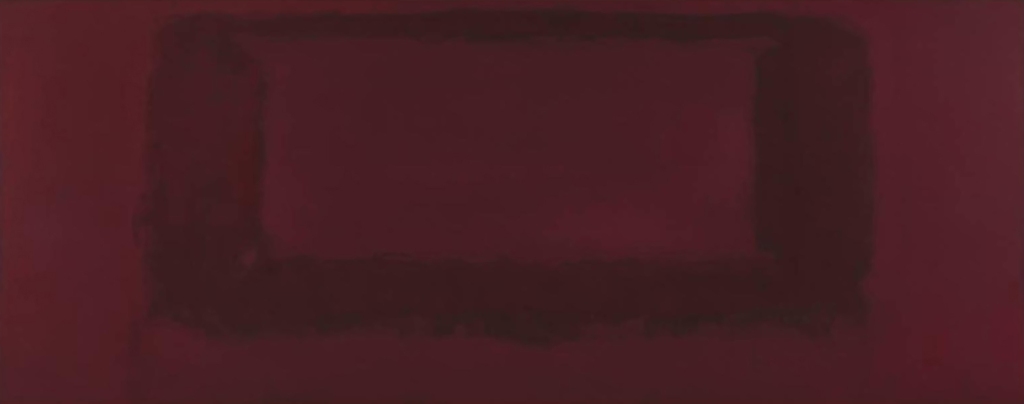

Many people who had the opportunity to see the over four-meter-long painting by Mark Rothko for the first time (Fig. 5) ask themselves what it is all about, or, more radically, why it is even considered to be a work of art. Lack of knowledge, often not just visual, may constitute a serious limitation to the level and depth of image understanding. Nonetheless, regardless of whether one accurately understands what they see in an image, it is certain that the neural system which analyses the content of a visual scene always tries to attribute meaning to what is being seen.

While dividing the visual systems into the framing system and the content analysis system, it is worth adding that both of them operate in the bottom-top and top-down mode of sensory data processing. On the one hand, almost every scene has elements that draw attention more, and, by stimulating the visual scene framing system, they activate the system of analysis of its content in the mode of distanced processing. On the other hand, the same scene may be subjected to a specific analysis, and can be framed depending the task that is being performed at the time by the observer, which is carried out in the top-down mode of sensory data processing.

IMAGE CONTENT ANALYSIS SYSTEM

Visual scene features

A minimum condition for subjective experience of seeing is recording (observation) of shape of a flat (two-dimensional) figure or a three-dimensional object in the space encompassed by the observer’s field of vision. I am not determining at this point whether we initially see objects in two or three dimensions, because it is a dispute that is yet to be resolved (for example Marr, 1982; Pizlo, 2008). However, regardless of that, the shape may well be simple — for example, a point or an outline of a geometric shape — or complex — for example a car driving by.

In the natural conditions we extremely rarely encounter situations in which we do not see any shape in our field of vision, namely things that reveal their distinctiveness by bordering with something else. Dense, total darkness or thick fog may lead us to believe that we cannot see anything. The “anything” simply means lack of the presence of any shape. A shape is a basic definitional quality of each figure or object and their parts as well as a feature of the background and space in which they exist, namely a definitional of every visual scene (Bagiński and Francuz, 2007; Francuz and Bagiński, 2007). The shapes of the things seen are the most important criterion in their categorisation (Francuz, 1990), and constitute the basis for knowledge on what the world looks like. They can determine the boundaries of both named and unnamed objects.

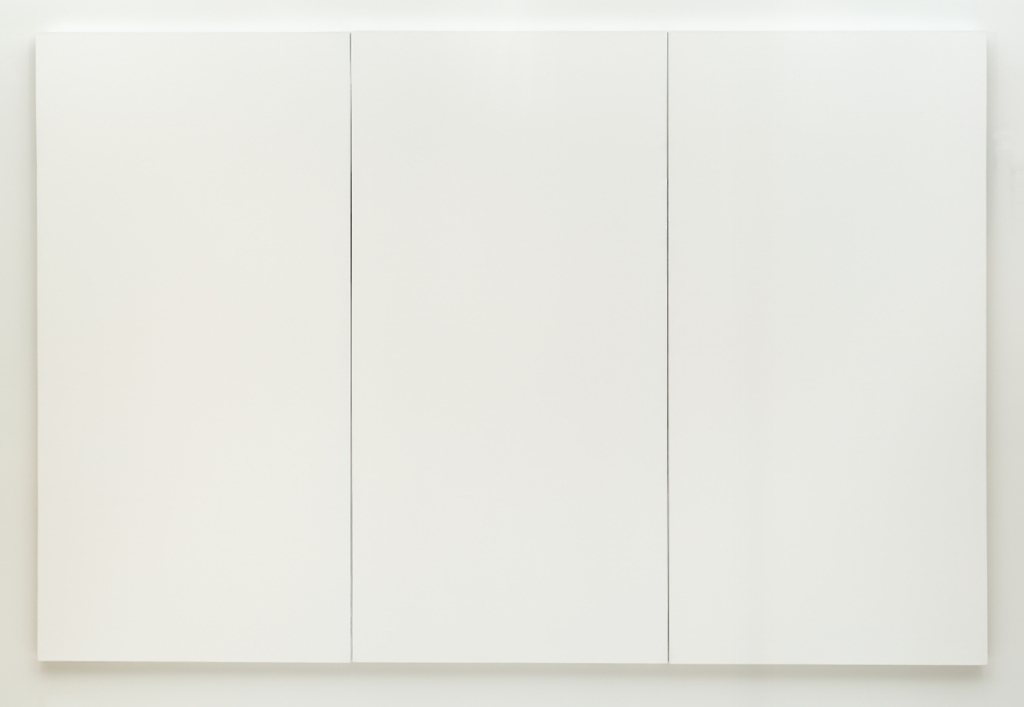

The experience of absence of any shapes in one’s field of vision is quite rare in the natural conditions. The contemporary art, on the other hand, provides a number of model examples of images that present the recipient with such experience.

In 1951, Robert Rauschenberg exhibited a series of provocative paintings titled White Paintings in which nothing whatsoever was painted (Fig. 6). Even the edges of the paintings were marked only by the shadows cast on the wall by stretchers.

White Paintings by Rauschenberg reveal two subtle boundaries; the first one — between seeing the scenery that a painting is a part of and looking at a painting, and the second — between the bottom-up and the top-down process of sensory data processing. On the one hand, the painting in Fig. 6 does not contain any meaning, yet the meaning of the visual scenery in which it is present is constituted by three, rectangular canvas painted white. On the other hand, the paintings provoke the minds of their recipients to fill them with any meaning precisely because of that fact. Similar conclusion in terms of music were drawn by Rauschenberg’s friend, John Cage, who in 1952 composed and performed the famous piece, “4.33” for symphony orchestra. During its performance, no musical instruments produced any sounds. Shapes as well as sounds in musical pieces, are, therefore, categories which refer to both what is registered by the senses (eyes or ears), and what is produced by the minds of observers or listeners.

The second property of every visual scene is the color, namely a specific sensory quality of a figure or object determined by its shape or background. Color can be described using three dimensions: hue, namely the attribute that we usually understand as red or blue color, lightness (or brightness, value), also known as the luminance or color value, characterising color brightness in a continuum between black and white, and saturation (or chroma) namely something that we experience as color intensity. Sometimes gloss, which is a derivative of the surface type or of a material covered with color, is also added to the list. The observed differences between the planes of the painting in terms of brightness (luminance) and hue are important tips regarding the shapes of figures or objects present in a visual scenery. Apart form the listed sensory properties, colors are also attributed with various symbolic values that can modify the meanings of the things we see (Gage, 2010; Popek, 2012; Zieliński, 2008).

The third characteristic of a visual scenery is its spatial organisation in two dimensions or more. If noticing at least one shape that suggests the presence of an object and separates it from the background is a constitutive feature of the scenery, then the object must naturally be located in a given place in the space. Referring to the notion of a place in a visual scenery makes one aware of the fact that it is a representation of some point of view, and that it is limited by the range of the observer’s field of vision, or the frames of the painting.

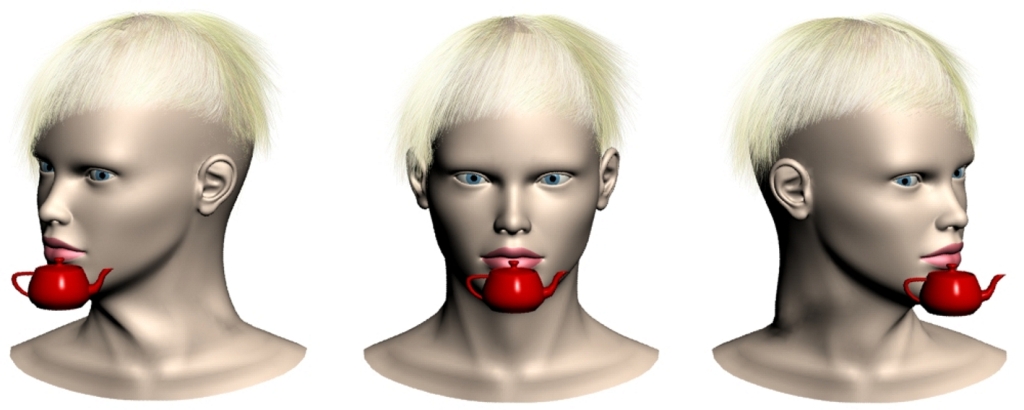

Determinations of object location such as, for example, “on the right” or “on the left, “higher” or “lower”, “closer” or further” both from the observer and from one another, are always relative to the point from which the given composition is seen, and the framework. It is concerns both whole visual scenes and paintings to the same extent. The importance of the observer’s position in relation to the scene that they see is so paramount that one can even speak about their egocentric position in the world of the object seen (Goodale and Milner, 2008). Such favouritism results from thee fact that the observers sees not only objects of a scene, but also relations between them. Relations between objects in a visual scene and on a painting, observed on a plane perpendicular to the visual axis, are intuitively established in relation to the sides of the observer’s body and to the natural frames outlined by their field of vision as well as the frames of the painting. On the other hand, noticing relations between objects along the lines parallel to the visual axis, that is inwards the painting, is not quite so obvious and requires application of special procedures of retinal data processing as well as knowledge on depth indicators, in order to grasp them.

The fourth and the last characteristic of a visual scene is its dynamic. It is a derivative of the velocity, variability, acceleration and movement trajectory of both objects within the visual scene and the observer. Movement of objects within the visual scene destabilises the spatial relations between them. In addition, an observer can change their location in relation to the given scene, thus changing the point from which it is viewed. This concerns not only relocation of the observer in space (for example during window shopping while taking a walk), but also the movement of their eyes, which causes the visual axis to shift from one fragment of the scene to the other. To put it shortly, the movement of objects in the visual scene as well as the movement of the observer while looking at it constitute a huge complication in the analysis of subjective experience of seeing. As stated earlier, the issue of movement within an image, namely within its frames is not the subject of this book, but the movement of the observer, in particular their eyeballs while looking at the image, is.

The listed four features of the visual scene — the shape, the color, the spatial organisation and the dynamics — can be divided into two categories, even thought these categories are not separable. The first one are those properties of the scene that allow the observer to recognise the objects present in it and notice something about their forms and colors. It is the category of objects. Perceptive analysis of the specimens that belong to this category usually does not depend on neither their position in relation to the observer, nor whether they are still or in motion.

On the other hand, spatial organisation and object movement in the visual scene are almost always related to something about which we can say that it has some shape and color. Noticing an object as being located, for example, on the right side of the visual scene results from its location in relation to the observer’s body. These features, however, are not only clearly related to the observer’s body representation, but also to their movement. In general, spatial organisation and object movement in the visual scene create the category of relations.

All the listed categories of visual scene features are not separable, because there are such visual experiences that are located at their interface. A very fast moving object or observer, through a complete blurring of the edges or even colors of things in the visual scene, may trigger the experience of seeing movement that is not a movement of an object of a certain shape. Due to the high speed at which the observer can move, as well as the ability to create images through electronic media, the number of such experiences is constantly increasing. So far, evolution has not developed efficient mechanisms for dealing with such situations. The best manifestation of this are the unexplained mechanisms of many optical illusions in the field of motion perception, created using digital visualisation techniques (see e.g. Michael Bach’s website, Optical Illusions & Visual Phenomena), as well as the illusions, e.g. jet pilots have (Bednarek, 2011).

Vision as an act of creation

David Hubel (Nobel laureate in the field of physiology and medicine in 1981) and Margaret Livingstone’s article, published in 1988 in Science, contains an excellent introduction to the problems concerning the functioning of the neural system of analysing the content of visual scene. Although a quarter of a century has passed since its release, and the research results in the field of cognitive neuroscience have verified most of its hypotheses regarding the processing of visual data, it is still an up-to-date and reliable source of information on the structure and function of individual components of the visual pathway.

The basic finding regarding the function of the visual pathway in the formation of a subjective vision experience is that, starting with the retina’s neurons found in both eyes of the observer and ending with various structures of their brain, the above-mentioned characteristics of the visual scene (such as shape, color, two- and three-dimensional spatial orientation and motion) are analysed by four neural pathways (subsystems), partly independent of one another. This statement is fundamental to understanding how the experience of seeing the scene and the objects inside it, is produced. It shows that data, recorded by photoreceptors, on the distribution of light entering the eye at a given time, are subject to partially independent analyses, conducted by four specialised nervous subsystems, for the most part of the visual pathway. The purpose of their activity is to interpret this data based on the characteristics of the objects and/or their fragments, which are currently in the observer’s field of vision on the basis of their visual experience.

The experience of seeing a complete visual scene is not the result of a simple image reflection projected on the eye’s retina (as, for example, in camera obscura), but takes place in two main phases: (1) decomposition, consisting in the analytical and relatively independent study of the listed features of the visual scene, after abstracting them from the retinal image; and (2) composition, i.e. integrating (synthesising) the results of the analyses conducted in the first phase, taking into account data previously recorded in visual memory.

The presence of both of these phases in each act of vision leads to the conclusion that the result of sensory integration always (to a greater or lesser extent) differs from the recorded source data. This means that the visual experience’s content is constantly produced by the visual system rather than – as it might seem – reproduced from retinal images. In this sense, vision is an act of creation, during which the image of reality recorded by the system of photoreceptors found in the eye’s retina of the observer is constructed.

Early analysis system of visual scene content

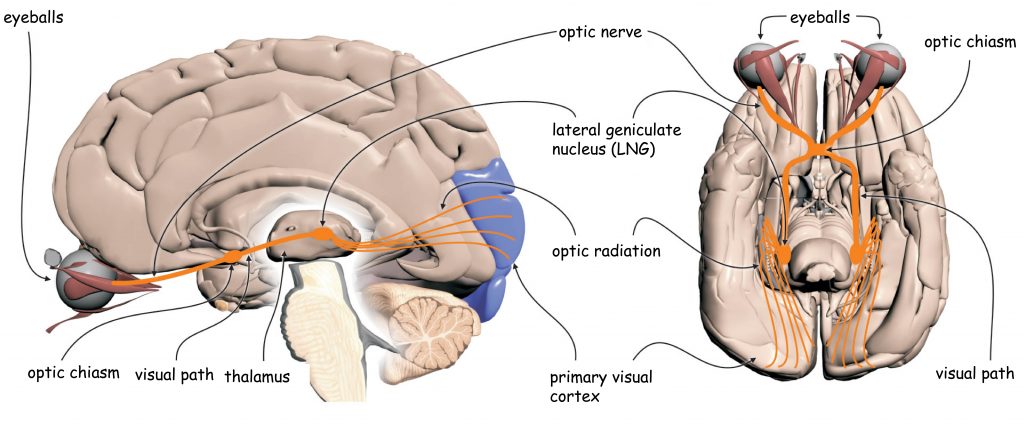

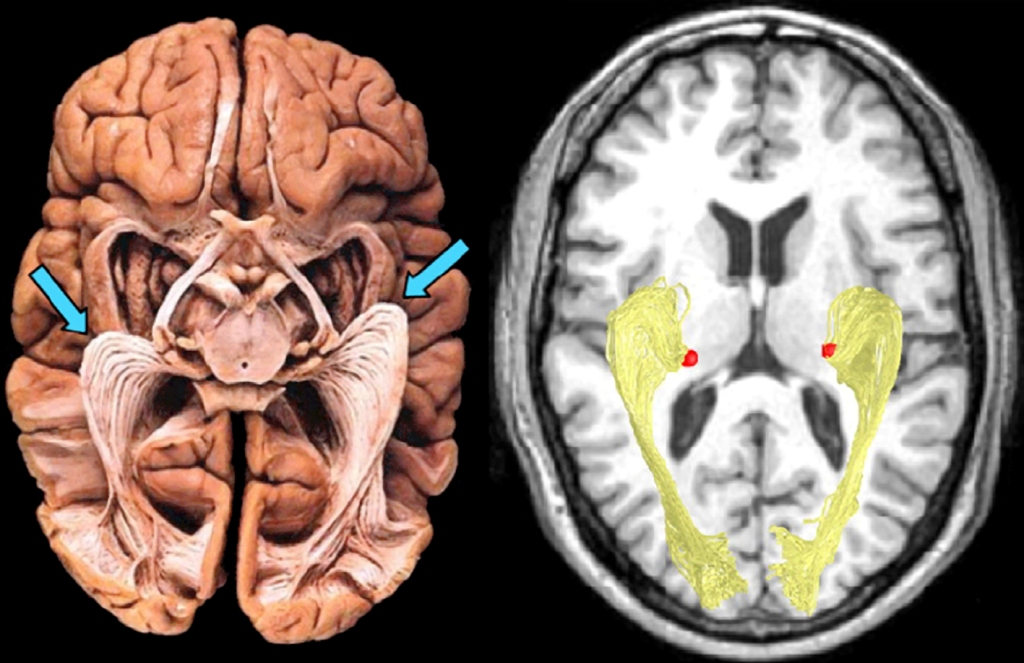

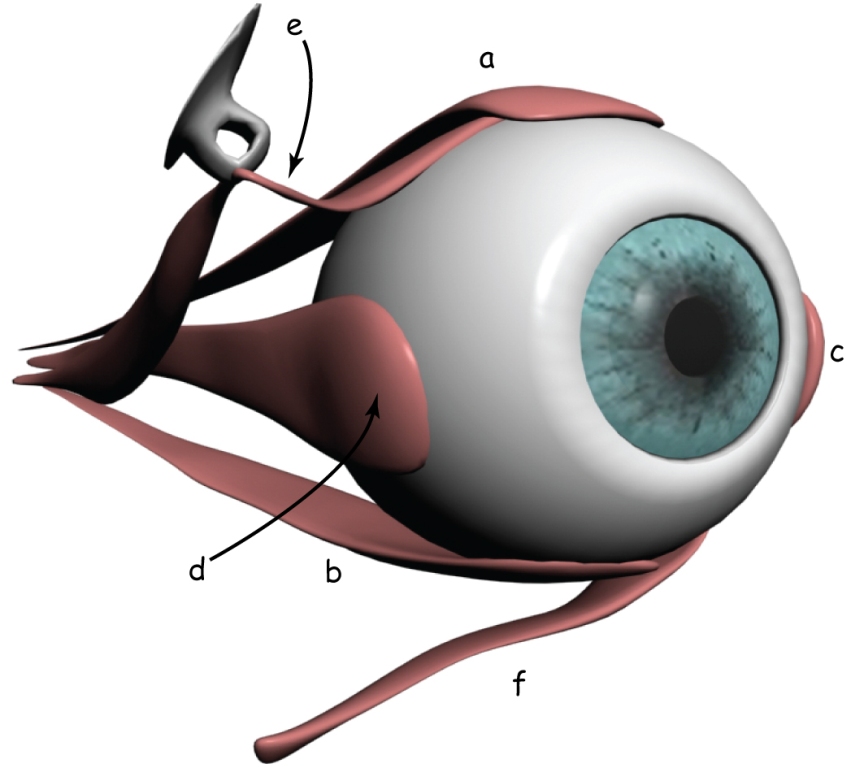

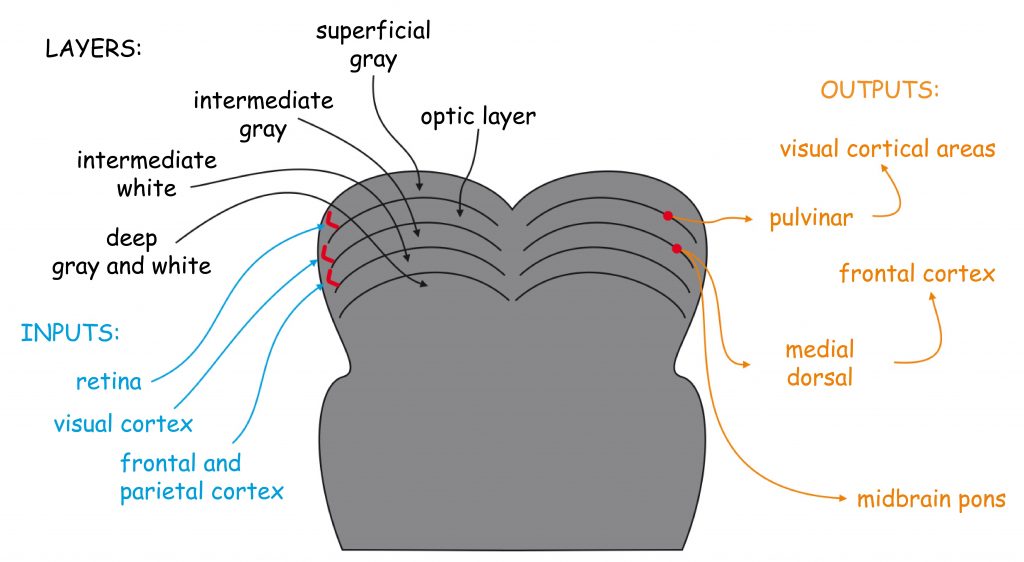

The photoreceptors located in the retina inside the eye record the distribution of entering light. This is the first stage of the visual scene content analysis procedure. The most important neural structures that are involved in the recording and arrangement of sensory data in the early stages of the visual pathway are shown in Fig. 7.

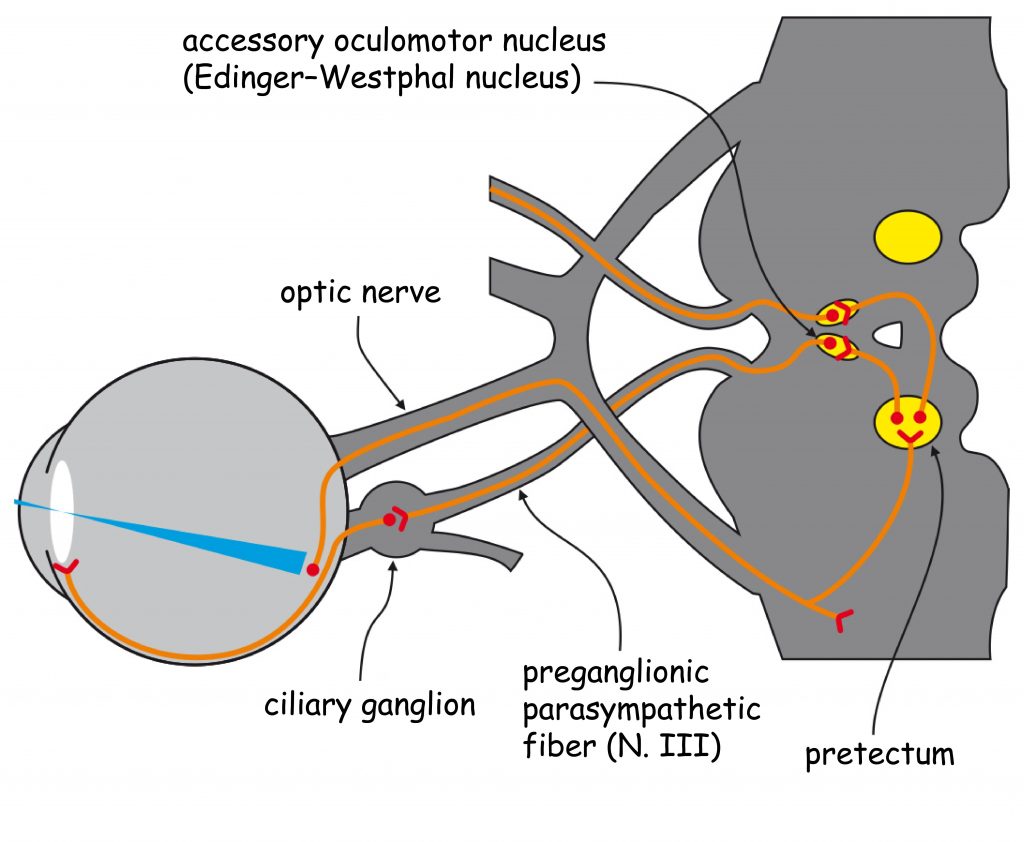

The early analysis system of visual scene content essentially consists of two separate structures: the eye, in particular the optical system (i.e. the lens that is in its front part and the retina located on its back wall inside the eye) and the lateral geniculate nucleus (LGN), placed halfway between the eyes and the cerebral cortex, in a place called the thalamus.

In the retinas of the eye there are, among others, so-called ganglion cells. Their axons (projections) over which, like a telephone wire, nerve signals are transmitted into the brain, form an optic nerve. In the area between the eyes and LGN there is an optic chiasm – a place where a bundle of axons carrying nerve signals from each eye splits into two parts. A half of the axons from the left eye connect with a half of the axons from the right eye (similarly, the other half of the ganglion cells axons exiting the both eyes) and continue their pathway together to enter the right and left brain’s hemispheres. The area between the optic chiasm and LGN is called the optic tract.

Together with LGN, nerve impulses are transmitted to the so-called primary visual cortex or striate cortex in the occipital lobe of the brain via the axons of a large group of cells, whose bodies are found in LGN. This area is called optic radiation and broadly closes the first stage of sensory data transmission and sensory data processing in the visual pathway.

Eye – the camera metaphor

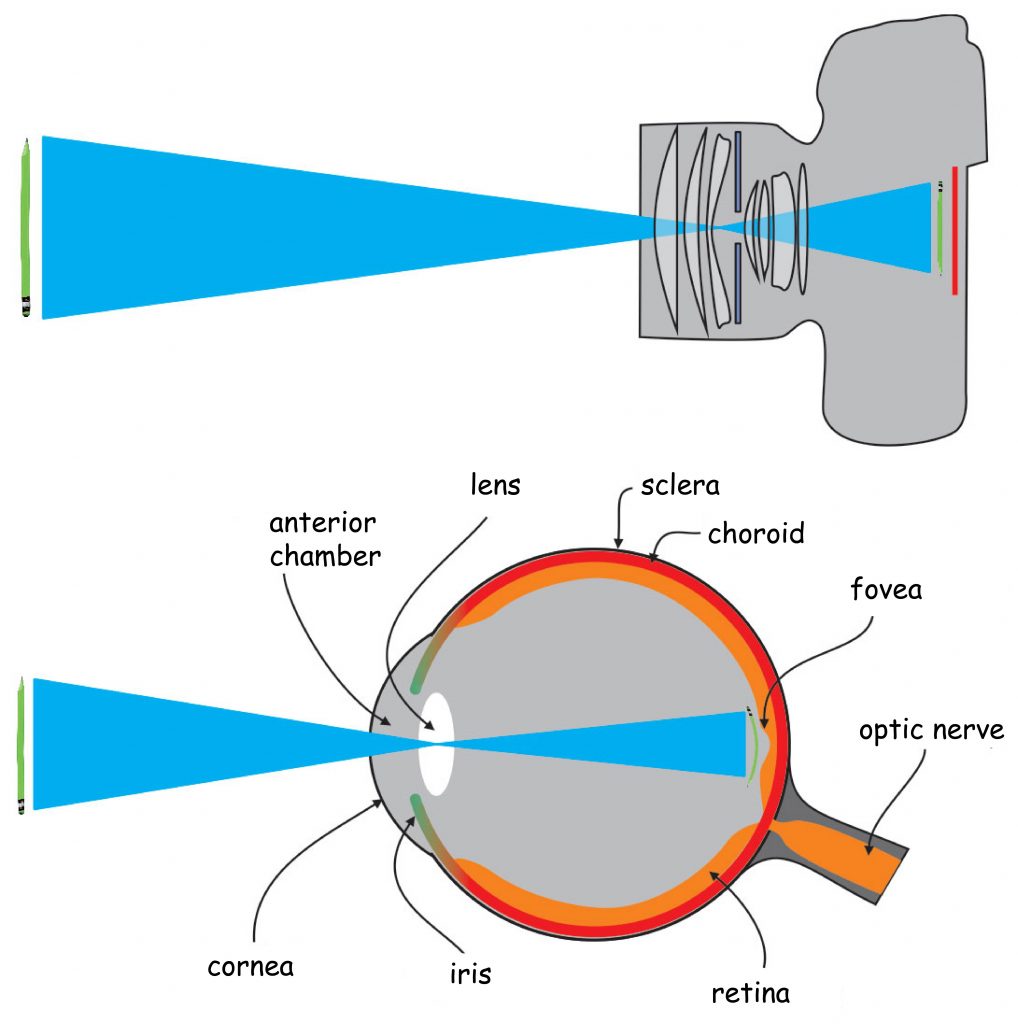

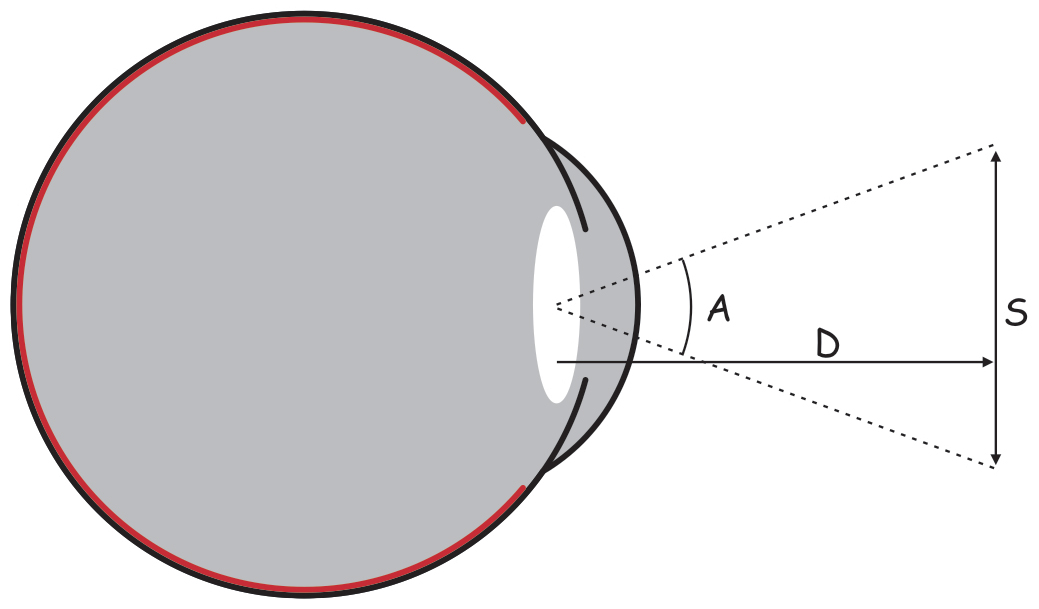

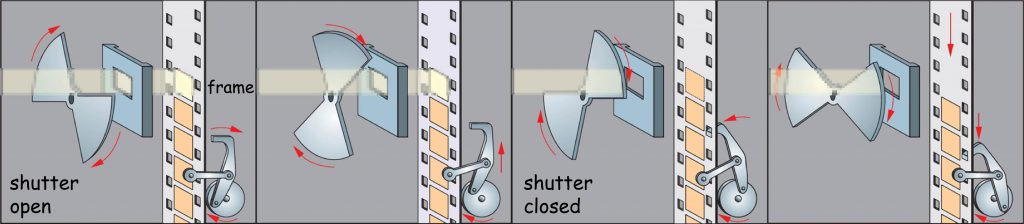

In many ways, the structure of the eye and the camera are similar. Before pointing out the main differences between them, it is worth to have a closer look at this analogy (Fig. 8).

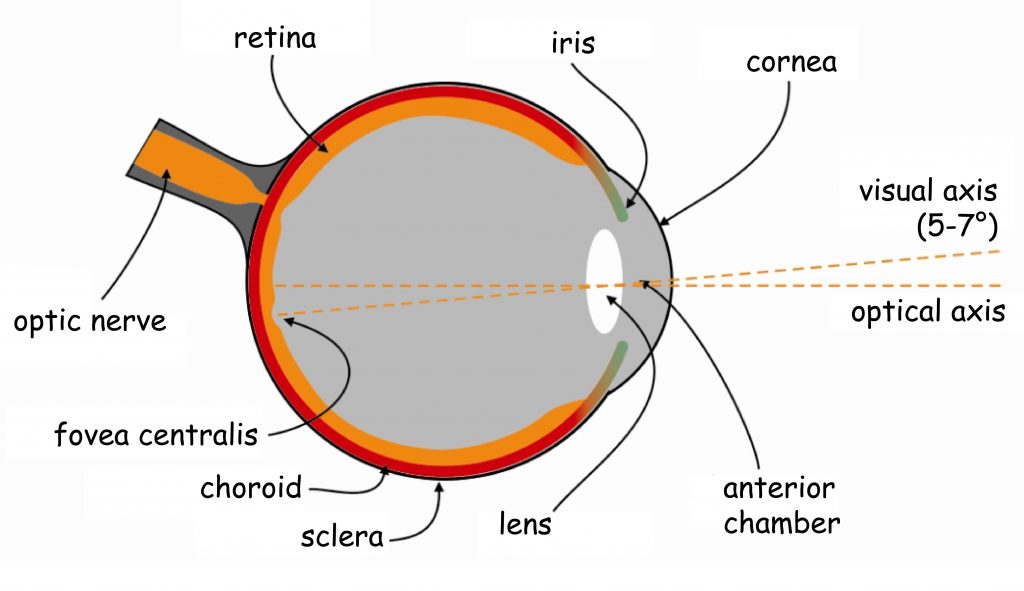

The visual pathway begins in the eye. Like the camera, the eye is made up of a hermetic and rigid body. In the eye, it is called sclera. It protects the eyeball against mechanical damage and to stabilise its shape.

The front of the eye has an optical system, i.e. the biological equivalent of a camera lens. The most outer element is the transparent cornea, which, like the sclera, protects the eye from the mechanical damage. It also acts as a sort of protective filter and fixed focal length lens. Just behind the cornea is a pin-hole visible for the lens, called the pupil, whose diameter is adjusted by means of the aperture, i.e. iris.

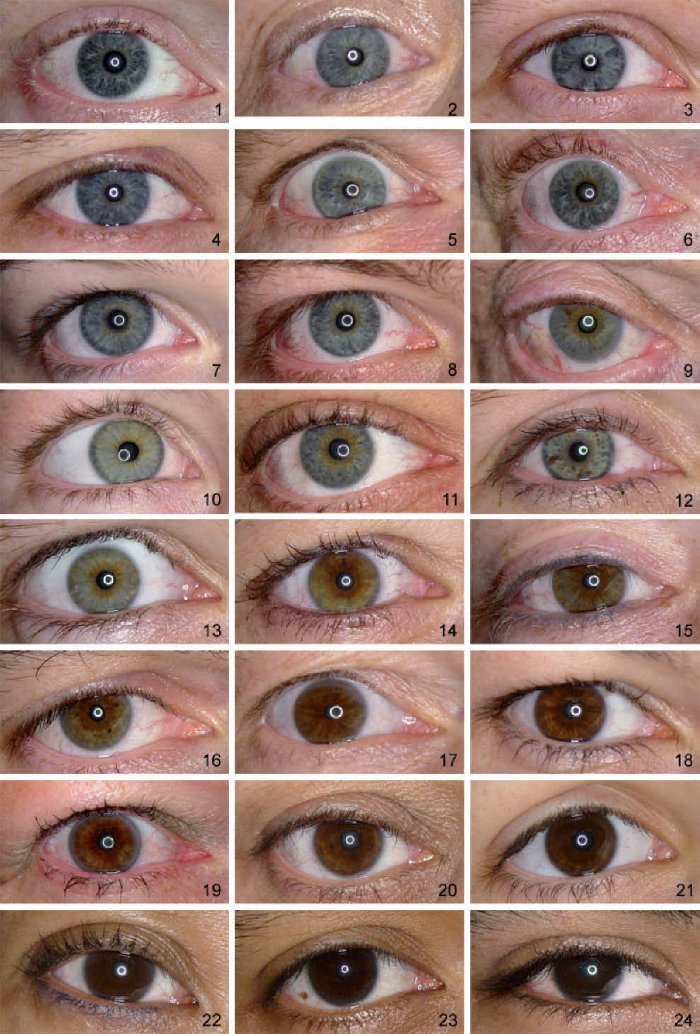

Incidentally, unlike the aperture on the camera, the iris is colorful: most often brown (in various shades), but it can also be grey, green or blue (Fig. 8.1).

Behind the iris, one of the most extraordinary organs in our body is placed a varifocal lens. Ralf Dahm (2007) calls it a “biological crystal”. The eye’s lens has two properties distinguishing it from the optical system in the camera. Firstly, it has excellent transparency, allowing transmitting almost 100% of light to the inside of the eye, provided that it is fully working. The eye’s lens is also varifocal, which enables the observer to see objects clearly at different distances from it. The mechanism of changing the focal length of the eye lens is nothing like the focal length change in the camera lens.

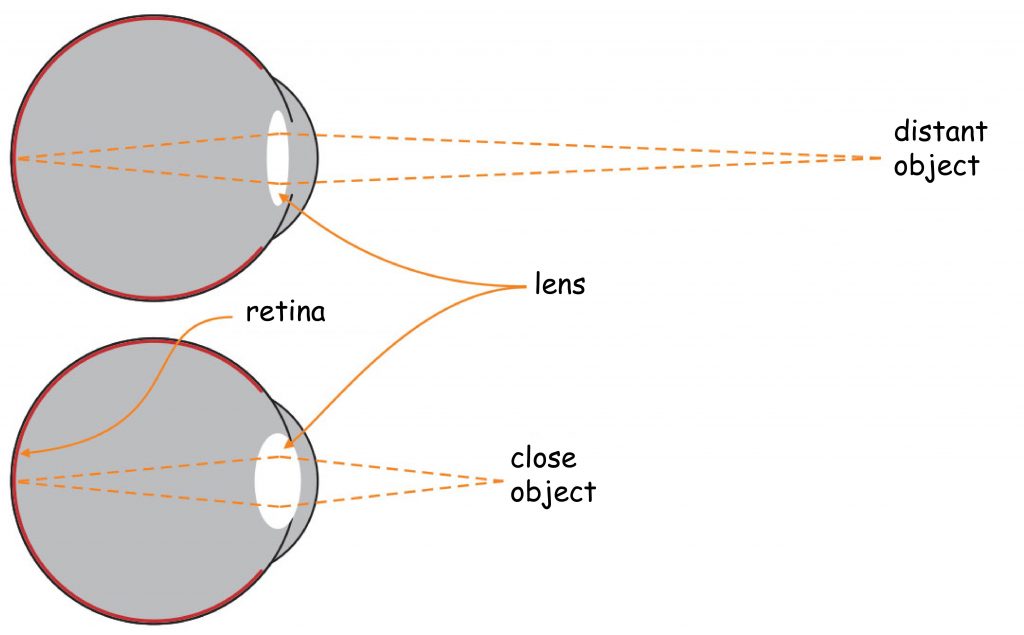

Maintaining visual acuity for objects at different distances from the optical system of the eye is ensured by the ability to change the shape of the eye lens. The closer the object is to the observer’s eye, the thicker the lens becomes. The further the object is to the observer’s eye the thinner it is (Fig. 9).

The lens is attached to the inner part of the eyeball with ciliary muscles. They stretch the lens as they contract, making it thinner, while they make the lens swell in its inner part as they become relaxed. The effect of focusing light rays on the back wall of the eyeball is associated with the change in the angle of refraction of light rays on the lens according to its thickness. A thicker lens bends light rays at a larger angle than a thinner one. This phenomenon is called eye accommodation.

On the opposite wall of the eye’s optical system, a photosensitive matrix, i.e. retina, is found. It covers about 70% of the inner surface of the eyeball. The light reflected by objects in the visual scene or emitted by them illuminates the bottom of the eye and creates its retina projection. It is characterised by the fact that it is: spherical, smaller and turned upside down in relation to the original (provided that we know what the original looks like). Nevertheless, such distortion are not a major problem for the brain.

If the observer has a fully functional optical system, the whole image of the visual scene is projected onto the retina’s surface with very high accuracy. This image is sharp and clear. Unfortunately, this does not mean that the retina reflects it in every place with the same quality on which it is projected. Due to the way in which the distribution of light reaching the retina is analysed, it can be compared to a heavily damaged cinema screen, which in many places is undulating, unclean, and even pitted in some places. To put it briefly, although the eye and the camera have many common features regarding their structure, their functioning is almost completely different (Duchowski, 2007). The sensors in the camera’s matrix will record each light parameter with the same quality, on the contrary to photoreceptors in the retina.

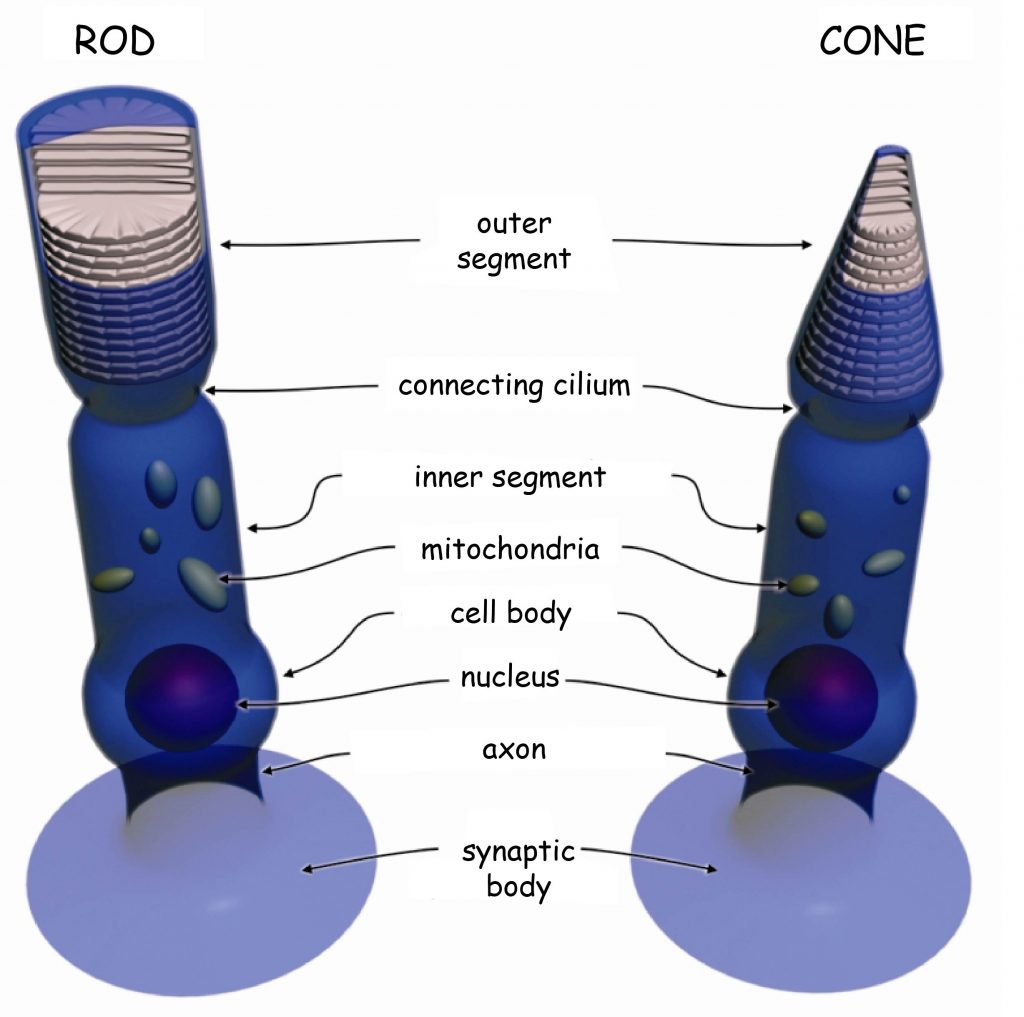

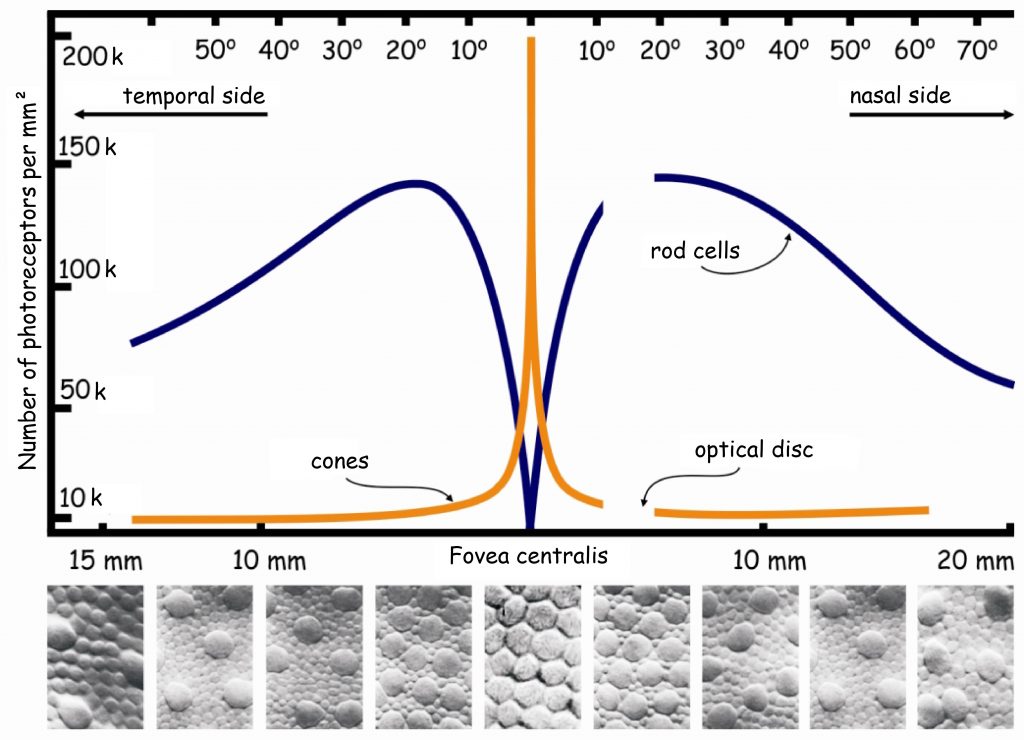

There are two types of photoreceptors in the retina, i.e. photosensitive cells. They are: cones and rods. The names of these receptors originate from their shapes: cones resemble tapers and rods – cylinders (Fig. 10). The rods are incomparably more sensitive to light entering the eye than the cones, which is why the cones work during the day and “fall asleep” at night, adapted to the dark. On the contrary to the cones, the rods “sleep” during the day, adapted to the light, and are active at night (Młodkowski, 1998).

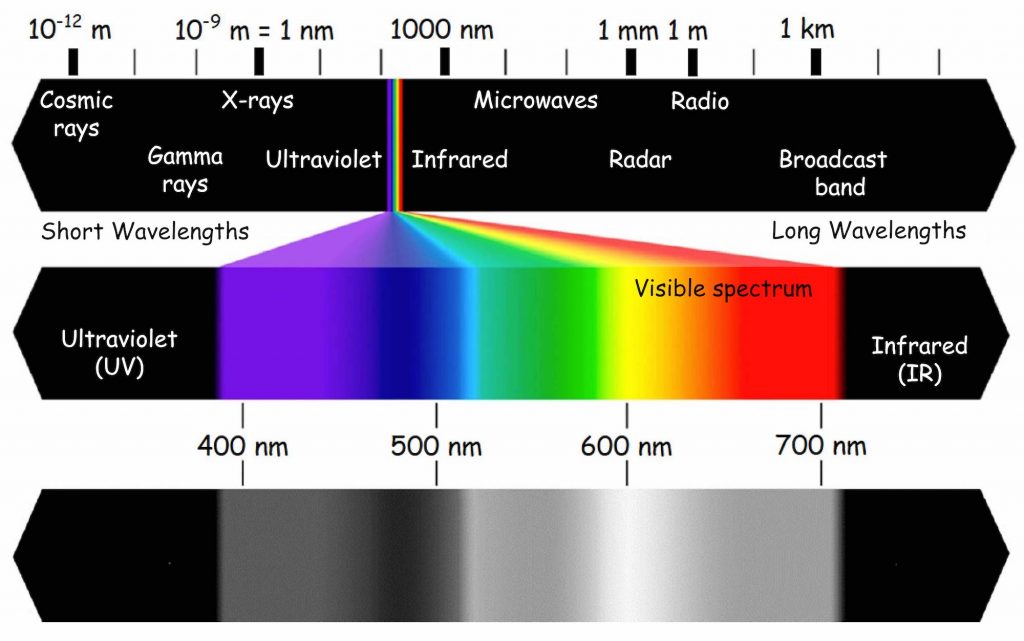

The cones react differently to various electromagnetic wavelengths in the visible light range, i.e. approximately from 400 to 700 nanometers. There is a close relationship between electromagnetic wavelength and color vision (Fig. 11). The cones also react to the intensity of the light wave. The rods, in turn, do not differentiate colors, but are particularly sensitive to the brightness (intensity) of light. The image reflected by the rods is achromatic. This means that when it gets dark and the rods acquire control over vision, we stop differentiating colors while still differentiating shades of grey. Obviously, this rule applies only to the colors that cover the surfaces on which the light is reflected, not those that emit it. At night, we see colorful neon lights, because the light they emit stimulates the cones. However, we cannot see the difference between the green and red paintwork of two adjacent cars in a dark street, because they reflect more or less the same light in low light conditions and the rods will react to them in a similar manner.

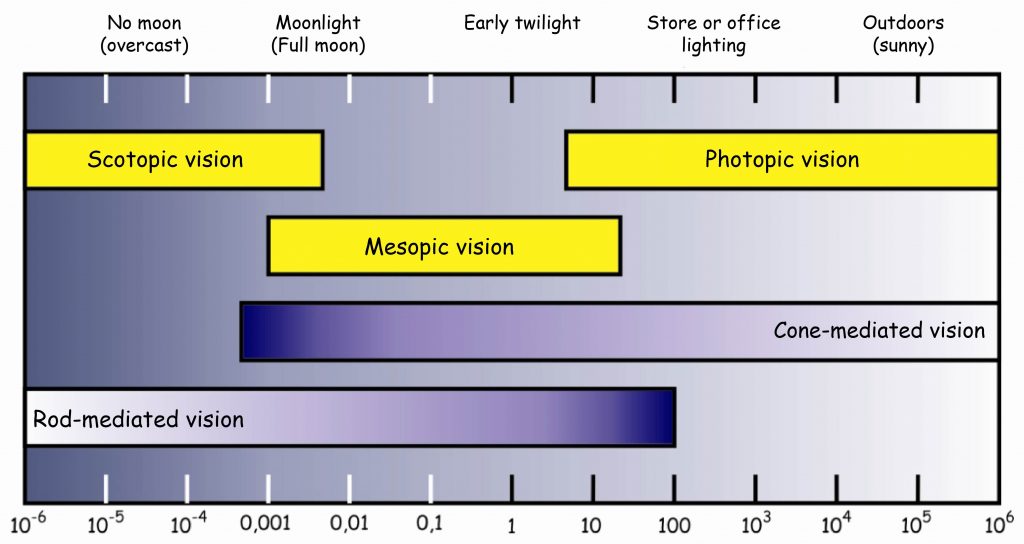

The vision under very good lighting conditions is called photopic vision and the cones primarily participate in it, whereas the vision in low light conditions is called scotopic vision and it is the result of rod activity.

Both types of photoreceptors work at twilight, early in the morning or in a moonlit night. The darker it is, the weaker the reaction of the cones becomes, and the rods awaken from the daytime slumber and react increasingly intensely. On the other hand, the brighter it gets, the more intensely the cones react to the light, whereas the reaction of the rods decreases. At this point, we are dealing with the so-called mesopic vision (Fig. 12). It is a time that is particularly dangerous for drivers, due to the fact that neither of the retina systems is 100% functional then. Brightness, which conditions activation of certain vision systems, is expressed using units known as candelas per square meter. Without going into details, one candela corresponds, more or less, to the light at twilight, right after the sunset.

Seeing images is possible primarily thanks to the cones which are responsible for seeing in good light conditions, and for this reason we will take a particularly careful look at them.

Distribution of cones within the retina

There are approximately 4.6 million cones in the retina of an adult person (Curcio, Sloan, Kalina and Hendrickson, 1990). Their largest cluster is located in the spot where the retina is crossed by the visual axis. Its other end, on the other hand, crosses the spot on which we focus our eyes. The visual axis is inclined at approximately 5° to the optic axis of the eye, running through the middles of all elements of the optical system of the eye, namely the cornea, the pupil and the lens (Fig. 13).

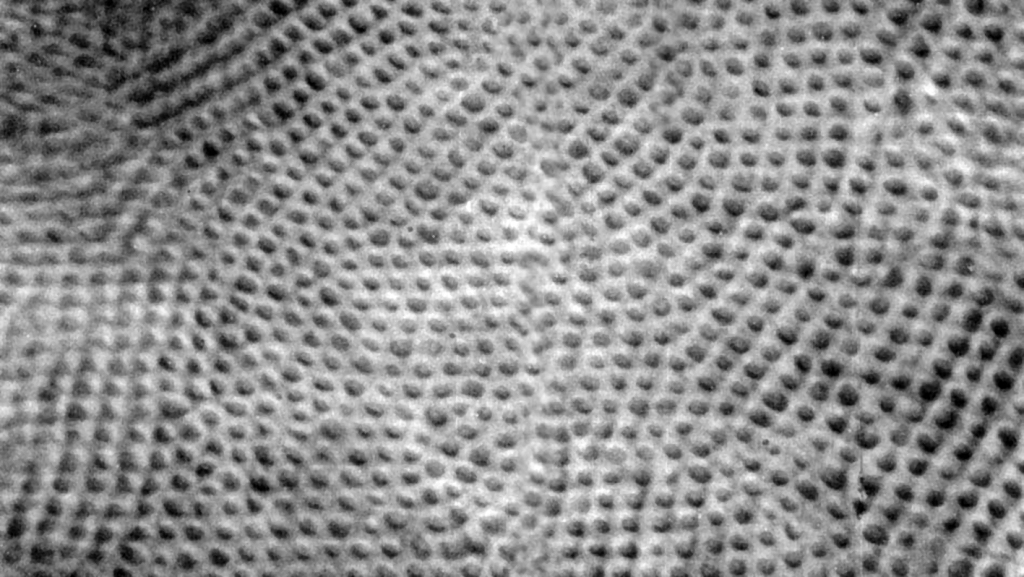

The intersection of the visual axis and the retina is a small elliptical area with chords of approx. 1.5 mm vertically and 2 mm horizontally and with a surface area of approx. 2.4 mm2 (Niżankowska, 2000). This area is called the macula and there are over half a million cones packed there, i.e. over 200 thousand /mm2. For comparison, there are only 3–4 pixels on a 1 mm2 LCD screen with a resolution of 1920 x 1200. That’s 50 thousand times less than in the central part of the eye!

Inside the macula, there is an even smaller area of approximately 1 mm², known as the fovea. Inside, in the foveola, the number of cones can reach up to 324 thousand /mm2. In adults, there are about 199,000/mm2 of them in this area (Curcio et al., 1990), and the further away from the fovea, there are less of them (Fig. 14).

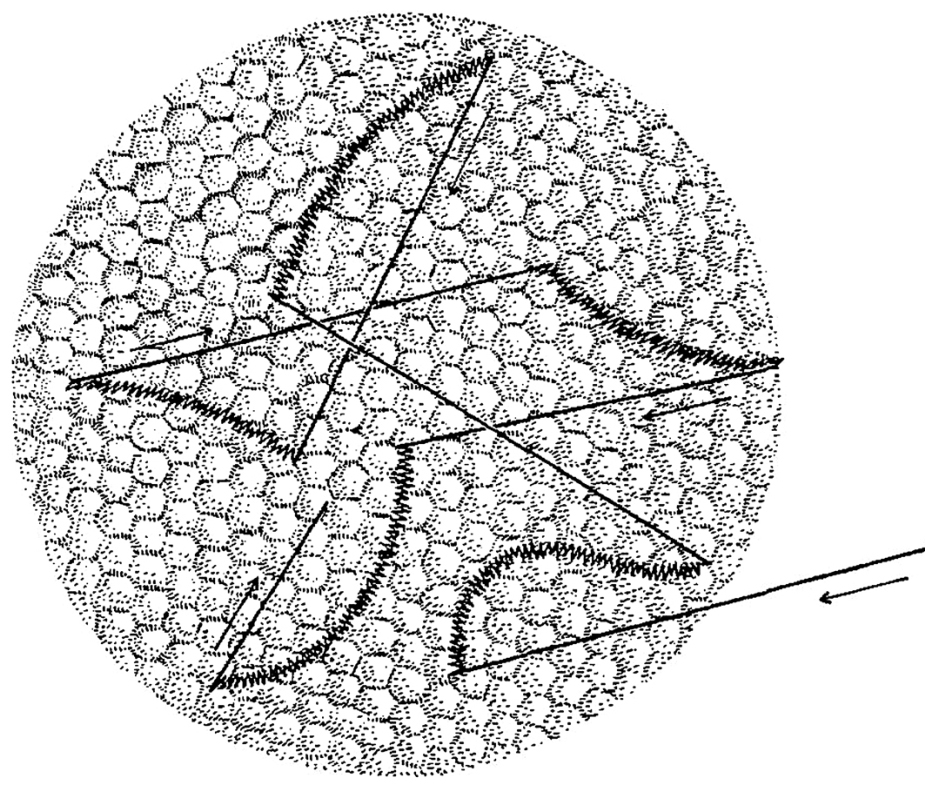

If it were possible to use cones from around the middle of the fovea to build a small image matrix of 36 x 24 mm for a digital camera, its resolution would be not 12 or even 30 megapixels, but approx. 280 Mpix! The accuracy of the image recorded by cells located within the area of the fovea is unimaginably high. In Fig. 15, one can see the retina’s surface near the center of the fovea, with visible cones in the shape of blobs (Ahnelt, Kolb and Pflug, 1987). This is approximately how the surface of the entire retina-screen, on which the image opposite the eye is projected, looks like. In different areas, there may be only a slightly different density of photoreceptors. What the brain learns about the world through the eyes is directly related to the activity of these small-screen points.

The surface of the fovea constitutes only 0.1% of the entire surface of the retina, while the surface of the macula — 0.3% (Młodkowski, 1998). There are no rods in there, and the cones located in the area constitute 1/8 of all cones in the retina. The remaining 4–5 million cones are distributed on 99.7% of the retina surface around the macula. It means that there are approx. 7 thousand of them per 1 mm² of the retina, beyond the macula (Hofer, Carroll and Williams, 2009). This is still quite a lot, but there are almost thirty times fewer cones on most of the retina than in the fovea.

As it is easy to guess, the direct consequence of the described distribution of photoreceptors on the retina is that depending on the place of its lighting, the projected image is processed with a different spatial resolution. In other words, the brain derives much more data that allow it to more clearly reconstruct the image of the visual scene from sites with a higher density of photoreceptors, than on the basis of data from those areas of the retina that are poorer in photoreceptors.

A few words about rods

As has already been highlighted, in addition to the cones, there are other photoreceptors, i.e. rods, in the retina of the human eye. Their number ranges from 78 to 107 million depending on the person (on average around 92 million). There are therefore 20 times more rods than cones (Curcio et al., 1990). This means that the retina is much less “hardware” equipped to see the world in colors under full lighting than in a monochrome way and in the dark. It is probably a remnant of our predatory ancestors, who did not really care about seeing the world in colors and definitely preferred hunting at night rather than during the day. Well, we inherited color vision from our ape ancestors, who preferred to eat during the day, carefully looking at the color of a banana or mango peel. This was crucial, at least as regards indigestion.

There are no rods in the fovea and the first ones occur only in the regions of the macula. The further away from the macula, the more rods there are. The largest number of rods is located about 20° from the fovea and is comparable to the number of cones in the macula, i.e. approx. 150k /mm2 (Fig. 14). Moving further in the direction of the peripheral retina, the number of stamens gradually decreases and there are half as many stamens on the edges of the retina, i.e. approx. 75k/mm2.

Such a distribution of rods causes that in poor lighting conditions, we can see something relatively clearly not looking directly “out of the corner of the eye”, but more precisely, moving the centre of the optical system of the eye by approx. 20 angular degrees from the place we want to see precisely. Only then will the image projected on the retina be interpreted with the highest possible resolution.

Retinal hole

Finally, I would like to make a few more points concerning one structural detail of the retina. Approximately 15° away from the fovea, in the nasal retina of each eye, there is a literal “hole” with a diameter of 1.5 mm and a surface of approx. 1.2 mm2. This area is called the blind spot or the optic disc, and it contains no photoreceptors. It houses the optic nerve, which transmits signals concerning the state of photoreceptor stimulation to the brain, and blood vessels necessary for the oxygenation of cells inside the eye. In this area, the image projected onto the bottom of the eye hits emptiness. This is very easy to discover yourself. All you need to do is close your right eye and look at one of the crosses on the right-hand side of Picture 16 with your left eye, and then slowly approach and move away from it. At a certain angle, you will find that the circle located on the left-hand side becomes invisible, and the break in the line disappears. This occurs due to the fact that the image of the point or break in the line is projected onto the blind spot.

Specialized ganglion cells

The bottom-up analysis of visual scene content in the early stages of the visual pathway is possible thanks to the presence of not only photoreceptors but also different types of neurons in the retina of the observer’s eyes, of which the aforementioned ganglion cells play a particularly important role. They are specialized in the processing of data concerning (1) the wavelength of visible light, which is the basis for color vision, (2) the contrast of light brightness, which enables us to see, among other things, the edges of things or parts of things, i.e. shapes in general, (3) the variability of lighting in time, which is the basis for seeing movement, and (4) the spatial resolution, which lies at the root of the visual acuity.

Among the many types of ganglion cells, it is possible to identify those that are particularly sensitive, e.g. to the wavelength of visible light corresponding to green color. This means, more or less, that if these ganglion cells send nerve impulses towards the cerebral cortex, like in the Morse code, then the observer sees something green. When the cells responsible for movement detection are activated, the brain “learns” that something is changing before the eyes of the observer, although on the basis of this information it does not yet “know” whether it is moving in the visual scene, the observer is moving, or both. It will “find out” about it as well, however, by analysing data from other senses. Suffice it to say that seeing one property or another of a picture directly results from the condition of neural transducers and transmitters of sensory data. Damage to them may mean that a certain property of the painting may go unnoticed, as if it was not there.

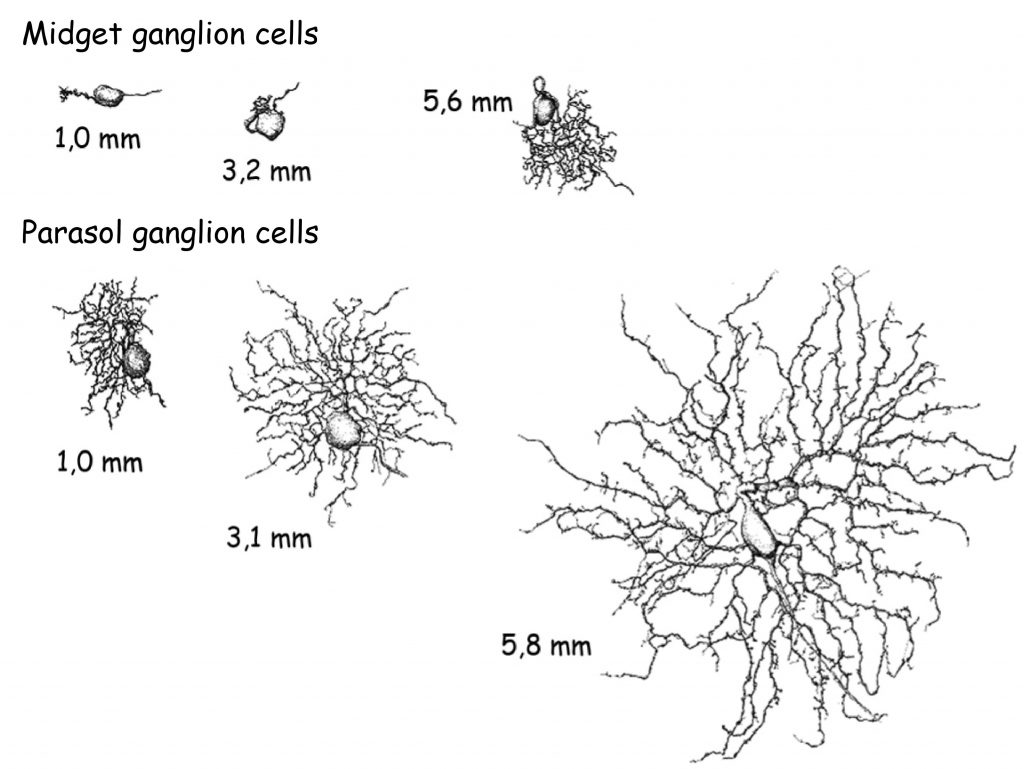

Upon taking a closer look at the anatomy of ganglion cells it turns out they are essentially divided into three groups. The first group consists of midget ganglion cells, with small bodies and a relatively small number of branches, i.e. dendritic trees and an axon. The second group consists of parasol ganglion cells, with large bodies and a much greater number of branches (Fig. 17). The third group consists of bistratified ganglion cells, with tiny bodies and disproportionately large branches compared to their body size, though still considerably smaller than the branches of parasol ganglion cells or even midget ganglion cells (Dacey, 2000).

Although midget ganglion cells are smaller than parasol ganglion cells, their magnitude, and especially the number and spread of dendritic trees, which allow them to receive nerve impulses from other cells, depends on their distance from radial fossa. The closer they are to radial fossa, the smaller they both are.

Apart from dendrites, each neuron also has an axon, which is an offshoot that transmits nerve impulses from the body of a given cell to another. It is the axons of all three types of ganglion cells that make up a fundamental frame of an optic nerve. It consists of approximately 1 million (from 770 thousand to 1.7 million) axons of ganglion cells (Jonas, Schmidt, Müller-Bergh, Schlötzer-Schrehardt et al., 1992) and somewhat resembles an electrical cord made up of copper wires.

The number of axons of the three mentioned types of ganglion cells in an optic nerve is not the same. The majority, approximately 80%, are the axons of midget ganglion cells, and only approximately 10% are the axons of parasol and bistratified ganglion cells. The fibres of small cells (i.e. midget and bistratified ganglion cells) therefore consist of approximately 90% of all axons forming the optic nerve. It means that, for some reason, data transmitted by smaller ganglion cells are more important for the brain than data transmitted to it via larger (parasol) ganglion cells.

Ganglion cell bodies are located in the retina of the eye. Their dendrites receive data from photoreceptor via different cells, but those will be discussed later. In any case, due to a smaller number of dendrites, midget ganglion cell connect with a much smaller number of photoreceptors and other cells in the retina than parasol ganglion cells which have large dendritic trees. What is important, however, is that small ganglion cells (midget ganglion cells and bistratified ganglion cells) connect mainly with the photoreceptors located in the central part of the retina. They are therefore much more sensitive to the spatial resolution of the retina’s lighting than parasol ganglion cells. Thanks to midget ganglion cells we can very accurately differentiate between shapes of different objects. The only issue is that their greatest cluster covers a relatively small area of retina and as a result – a small field of vision.

Another property of small ganglion cells is their sensitivity to light wavelengths. More than 90% of them specialises in that field, originating the processes of vision and color differentiation. Almost all midget ganglion cells perfectly differentiate electromagnetic wavelengths corresponding to green and red colors, but they perform much worse when it comes to the opposition of yellow and blue. However, this task is performed by bistratified ganglion cells. They are the ones that play an essential role in the processing of data related to differentiation of yellow and blue colors (Dacey, 2000).

As opposed to small ganglion cells, parasol ganglion cells do not differentiate light wavelengths, but are much more sensitive to edge detection between planes of similar brightness than midget ganglion cells. They are able to register a 1–2 percent difference in the brightness of juxtaposed surfaces, and 10–15 percent differences in brightness are registered without any problem (Shapley, Kaplan and Soodak, 1981).

Midget ganglion cells require a much greater difference in the brightness of juxtaposed planes in order to register it. Moreover, parasol ganglion cells cover a much larger surface of the retina than midget ganglion cells. Both these properties of large ganglion cells perfectly complement the limitations of small cell in terms of edge detection of things in the observer’s field of vision outside fovea and in regards to spatial relations between them.

There is another important difference between small and large cells. Large cells have axons much thicker than those of small cells and that is why they send nerve impulses two times faster, i.e. at speed of approximately 4m/s, than midget cells. That property of parasol cells is of key importance for the detection of changes in the lighting of retina, which allows the observer to recognise movement. Visual movement detection of an object (as well as the movement of the observer) is tantamount to the same or similar light and shadow arrangement moving across the retina in time. The pace and direction of the movement of an image on the retina is an indicator of speed and direction of movement.

While finishing this functional characteristic of large and small neurons, it is worth noticing that, similarly to the traits of almost every visual scene, these can also be connected into two categories. Midget ganglion cells and bistratified ganglion cells are especially sensitive to color and spatial resolution of light which constitutes the basis for the perception of shapes of things in a visual scene and differentiating them from one another. It could be said that it is thanks to their activity we have a chance to separate the objects which form a visual scene from ourselves and the background. It’s the most basic function of vision and that is why the axons of midget ganglion cells and bistratified ganglion cells are so numerously represented in the optic nerve.

Parasol ganglion cells, on the other hand, thanks to high speed of signal transmission and much greater sensitivity to different shades of brightness in the visual scene than small cells, make it possible to see the movement and spatial organisation of the viewed scene, and also very effectively support the process of identifying edges of things.

Thus, the anatomical structure and physiology of the ganglion cells result in their specific functions, and the foundations of the subjective experience of viewing the image begin to emerge from them. We view the images the way we do because this is what our biological hardware is like, not due to the fact that they are like this.

From the retina to the lateral geniculate nucleus

The first structure in the brain to receive information from the retina of the eyes is the lateral geniculate nucleus (LGN) located in thalamus. Already in the 1920s, Mieczysław Minkowski, a Swiss neurologist of Polish origin, discovered that axons of small and large ganglion cells connect with LGN in a surprisingly orderly manner (Valko, Mumenthaler and Bassetti, 2006).

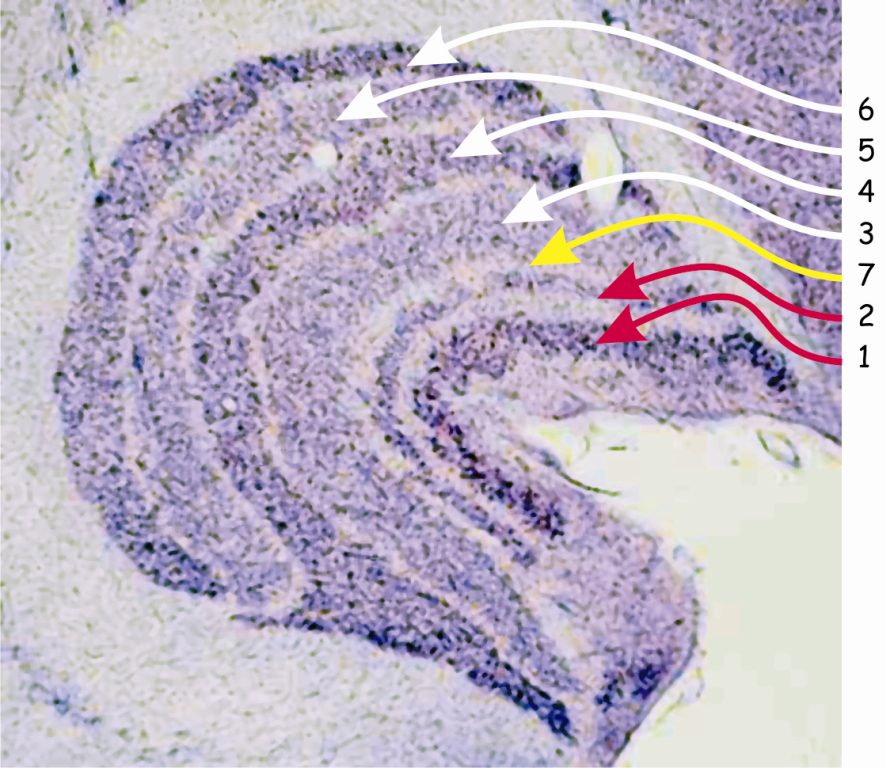

The structure of LGN resembles a bean grain, at the intersection of which six distinctly separated layers of neurons characterised by two different sizes are revealed (Fig. 18).

Darker layers of cells numbered 1 and 2 receive nerve impulses through the axons of parasol ganglion cells. Since the cell bodies forming these layers in the lateral geniculate nucleus [LGN] also have relatively large sizes, these layers are called the magnocellular layers, M‑type (magno) or Y‑type cells.

Layers marked with numbers 3–6 receive signals from axons of small ganglion cells and since they are also composed of cells with small bodies, they are called parvocellular layers, P‑type (parvo) layers or X‑type layers. The anatomy and functional traits of small and large ganglion cells located in the retina and the cells forming specific layers of the lateral geniculate nucleus are almost identical. Therefore, among researchers involved in the issue of vision, there is a consensus that umbrella ganglion cells and in two layers of LGN — M‑type cells are part of the so-called magnocellular pathway, while midget ganglion cells and in four layers of LGN — P‑type cells determine the so-called parvocellular pathway.

In Fig. 18, the seventh layer located between the magnocellular and parvocellular layers, i.e. the second and third, is also marked. In this layer there are koniocellular cells or otherwise — K cells, which receive projections from ganglionic koniocellular cells. Due to the existence of this layer in LGN, the third visual pathway, the so-called koniocellular pathway, should be added to the two previous ones. Since the cells in the koniocellular pathway constitute only about 10% of all ganglion cells and because they perform analogous functions to those performed by midget ganglion cells, the koniocellular pathway is treated as part of the pathway.

Summarising the properties of the magnocellular and parvocellular pathways of vision, it is worth to take a look at the following list.

| Characteristics | Magnocellular pathway | Parvocellular pathway |

| The size of the ganglion cell body | large | small |

| Size of the receptive field of ganglion cells | large | small |

| Speed of a nerve impulse transmission | fast | slow |

| Number of axons in optic nerve and tract | small | large |

| Color differentiation | no | yes |

| Contrast sensitivity | small | large |

| Spatial resolution | small | large |

| Time resolution and movement sensitivity | large | small |

| Sensitivity to differentiation of brightness of planes lying next to each other | large | small |

Breakdown of the layers in LGN according to the optic chiasm

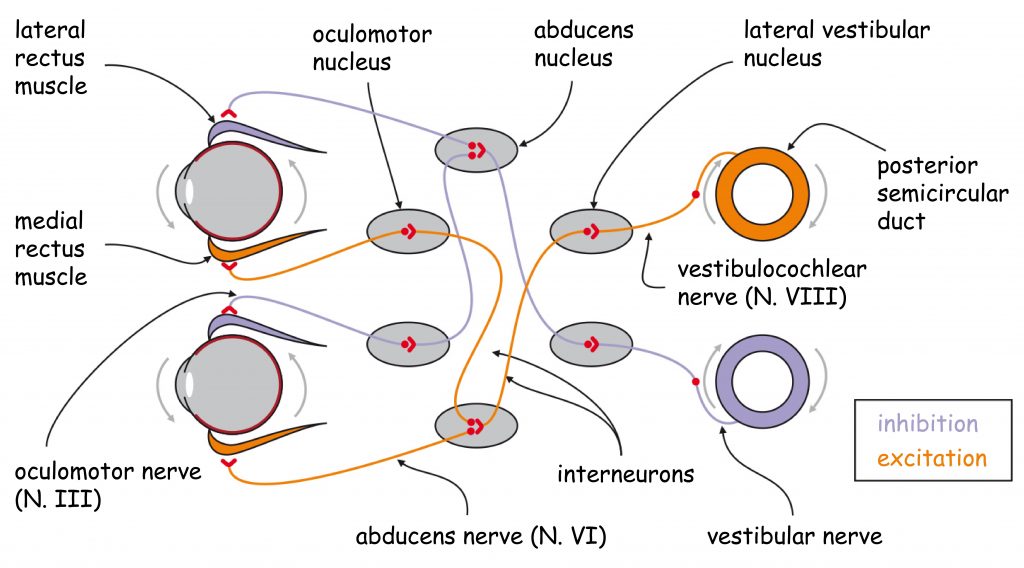

LGN layers are divided not only into koniocellular, magnocellular and parvocellular, but also into right and left ones. Like most cerebral structures, LGN is an even body, i.e. it is found on both the right and left side of the brain. In primates, including humans, there is an aforementioned optic chiasm (Fig. 19) between retinas and LGN. Optic chiasm is the area where the ganglion cell axon bundles are divided into two parts. This is a very clever evolutionary invention, thanks to which the loss of one eye does not mean that any part of the brain is completely excluded from visual data processing. After the intersection of the optic nerve bundles, LGN, lying on the same side as the eye, receives data from the external (temporal) part of the retina of the eye and data from the paranasal part of the second eye retina.

As a result of the division of optic nerve axons into two parts, LGN layers marked in Fig. 18 with numbers 1, 4 and 6 receive signals from the eye on the opposite side of the head and layers 2, 3 and 5 from the eye on the same side of the head as LGN. The same rule applies to both LGN structures on the right and left side of the head.

It is worth remembering that LGN performs the function of organising sensory data related to various features of the visual scene. Having familiarised ourselves with an almost chaotic tangle of dendrites and axons of numerous cells that make up the eye retina, from this point on it is much easier to find out which cords are the source data concerning colors, edges of things, their movement and spatial organisation. Moreover, their ordering allows to predict not only from which side of the body they come, but also from which part of the eye. Seeing is a major logistical undertaking for the brain and therefore good data organisation is the basis for success, i.e. creating an accurate representation of the visual scene.

Optic radiation

The last stage of the early pathway for visual data processing ends with the so-called optic radiation. Thanks to optic radiation, data on the distribution of light in the visual scene is delivered to the visual cortex in the occipital lobe, or more precisely to the so called calcarine sulcus, located on the inner side of the lobes. Radiation, in fact, is a band of cell axons, whose bodies form individual layers in LGN. It takes its original name from a specific system of fibres, which are distributed among tightly packed structures lying underneath the cerebral cortex in a large curve (Fig. 20).

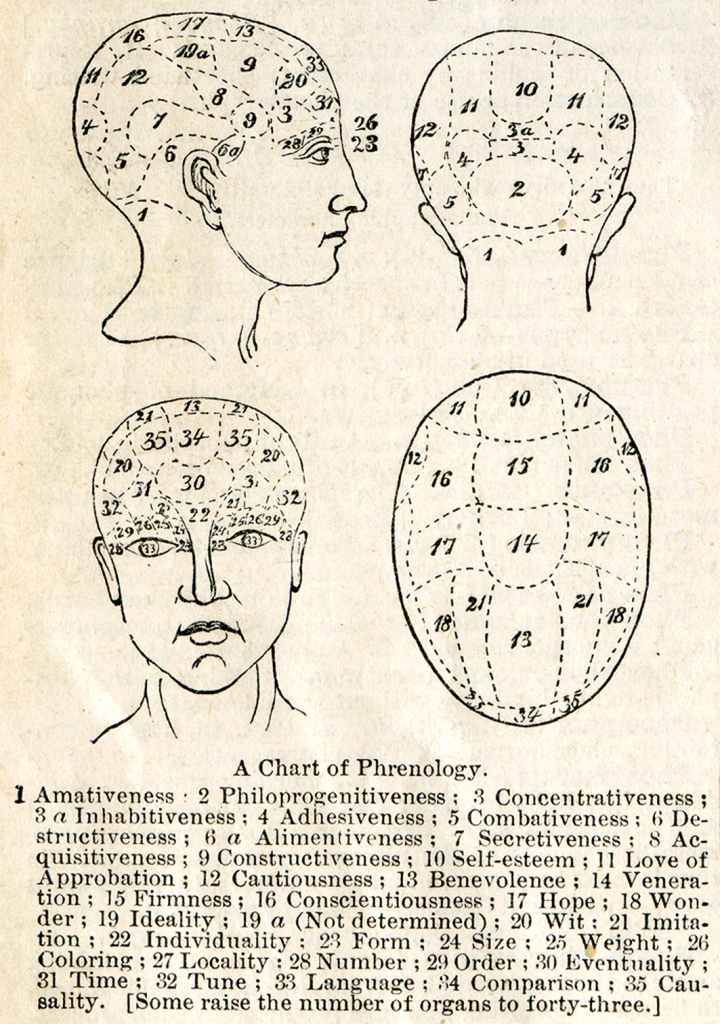

Phrenological point of view on the functions of the cerebral cortex in the process of seeing

Still at the end of the 19th century, phrenology was the dominant neuropsychological concept. In accordance with the assumptions of its creator, a German physicist Franz Josef Gall, there is a close link between the anatomical structure and the location of various structures of cerebral cortex, and the mental functions carried out by them, that is — the mind. Even though at present phrenology is regarded as pseudoscience, it was, in fact, an extraordinarily accurate intuition, which underlies the contemporary neuroscience (Fodor, 1983).

Due to the lack of appropriate diagnostic tools and methodology of neuropsychological studies, phrenologists quite freely associated psychological functions with various parts of cerebral cortex. With regard to vision, however, they had no doubts that it was conducted by the frontal, supra- and periorbital cortex. The whole part of the cortex was defined as perceptual and its different parts were assigned the functions of viewing: form (field 23), size (field 24), coloring (field 26), as well as weight (field 25). In the temporal areas of the orbital cavities they located functions related to perception of numbers (field 28) and order calculation (field 29), and in the post-periorbital cortex — language (field 33). In the frontal structures the imitation has also found its location (field 21) (Fig. 21).

Phrenologists did not even presume that seeing is a process which involves the structures of the brain furthermost from the cerebral cortex, namely the occipital love. They would rather attribute such functions as love, fertility, parental love and love for children in general (philoprogenitiveness, field 2), friendship and attachment adhesiveness, field 4), love for one’s home and homeland (inhabitativeness, field 3a) as well as ability to concentrate the attention, especially in intellectual tasks (concetrativeness, field 3).

Functions attributed by phrenologists to the temporal lobes, which — as we already know today — also play an important role in seeing. They would rather locate in them the basis for combativeness (field 5), destructiveness (field 6), and secretiveness (field 7).

The results of the studies in the field of neuroscience revealed completely different location of the structures responsible for various mental functions, particularly for vision, than in phrenology.

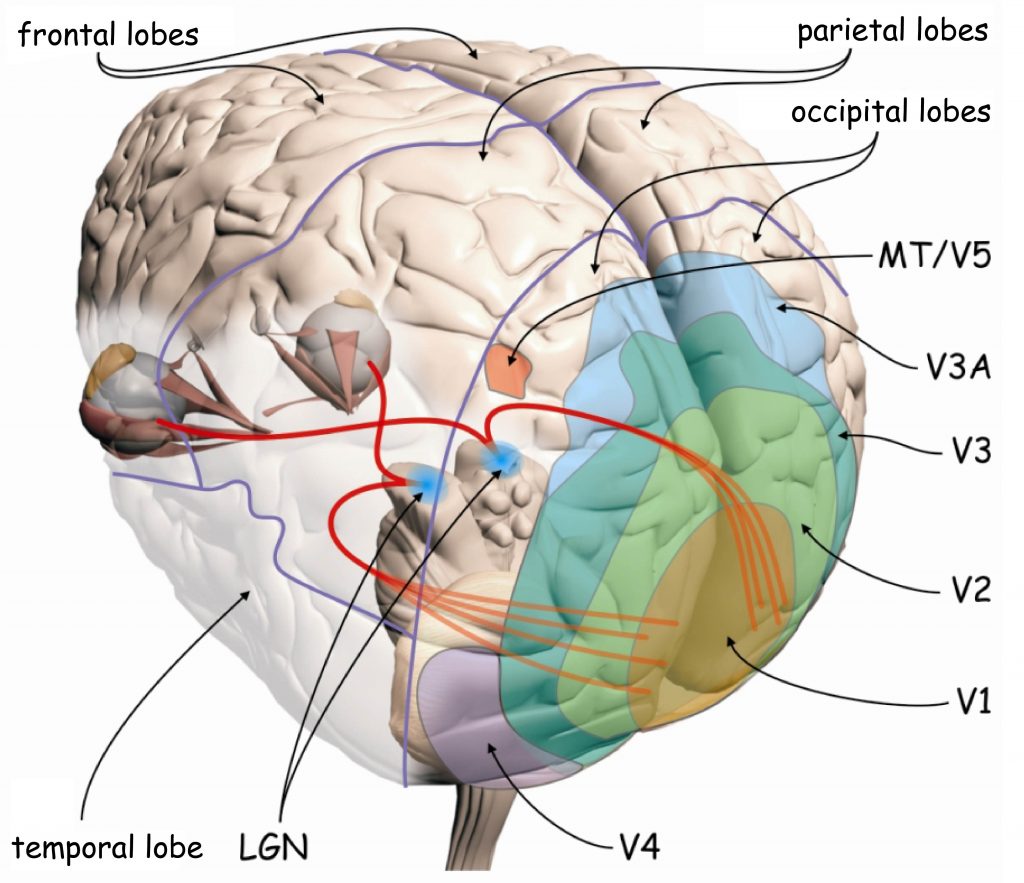

Visual cortex in neuroscience

Sensory data processing in the upper regions of the visual pathway is performed by approx. 4–6 billion neurons in the occipital, parietal, temporal and even frontal lobe. Overall, the data recorded by photoreceptors in the retina of the eyes involve about 20% of the total surface area of the human cerebral cortex (Wandell, Dumoulin and Brewer, 2009). Since occipital lobes play a particularly important role in the visual process, this area is also referred to as visual cortex (Fig. 22).

Due to its anatomical structure, visual cortex can be divided into two parts: striate cortex and extrastriate cortex. Striate cortex is located at the very end of the occipital lobe, in its medial part, in the area called the calcarine sulcus (Fig. 23 A). According to the classification of brain areas proposed in 1907 by the German neurologist, Korbinian Brodmann, this is area 17. Apart from the calcaline sulcus, it also involves a part of the outer occipital lobe (Fig. 23B). Area 17 is also referred to as primary visual cortex or V1 area (from the first letter of the English word vision and to emphasise that this is the first cortical stage of the visual pathway).

In both hemispheres of the brain, there are approx. 300 million neurons in the V1 area, which is 40 times more than the number of neurons in LGN (Wandell, 1995). Nerve impulses travel to V1 along the optic radiation, i.e. along the axons of the cells whose bodies are located in LGN.

The remaining parts of the visual cortex are referred to as extrastriate cortex. It basically involves Brodmann’s areas: 18 and 19, or in accordance with another notation, the areas: V2, V3, V3A, V4 and V5 (Fig. 22). Cortical structures found in all lobes of the brain are also involved in visual data analysis: in the parietal lobe, e.g. V7 or intraparietal sulcus (IPS), in the temporal lobe, e.g. inferior temporal cortex (ITC) or superior temporal sulcus (STS), and in the frontal lobe, e.g. the frontal eye field (FEF).

In order to understand what the brain does with the light stimulating photoreceptors in the retina of the eyes, it is necessary to analyse the structure and functions of all the cortical parts of the brain involved in vision.

Where do the stripes on the V1 cortical surface come from?

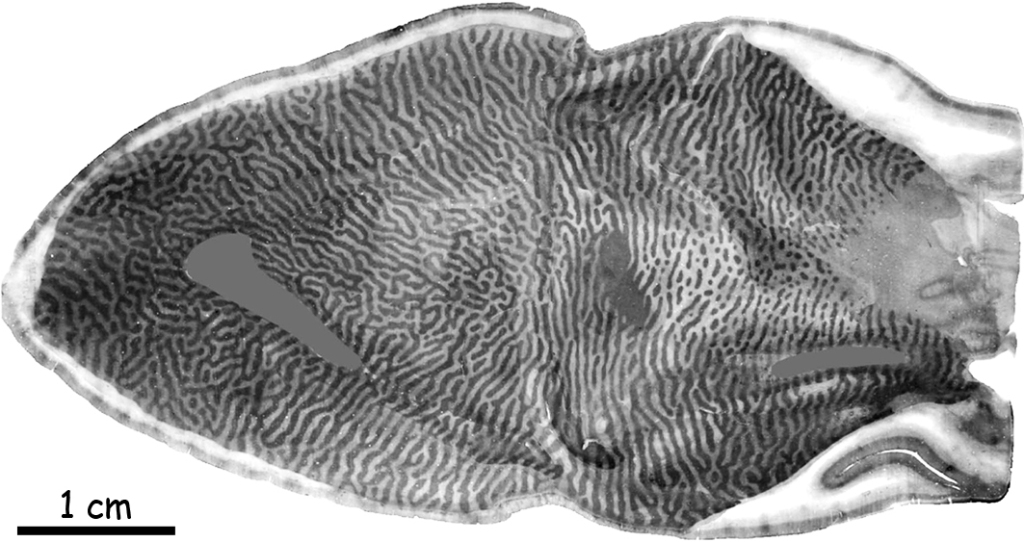

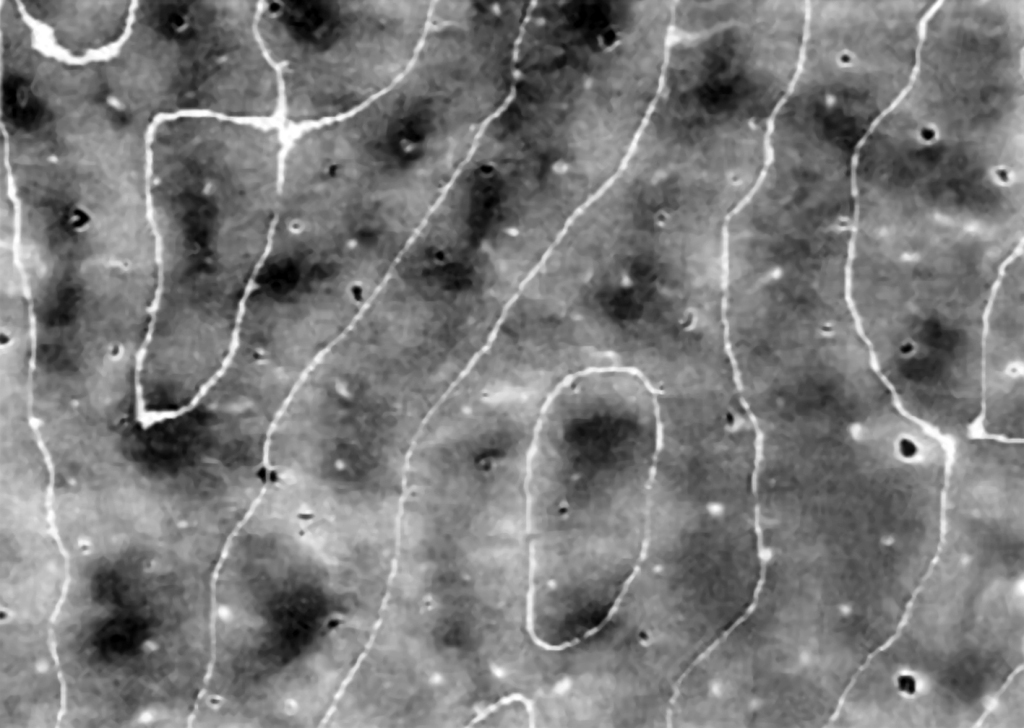

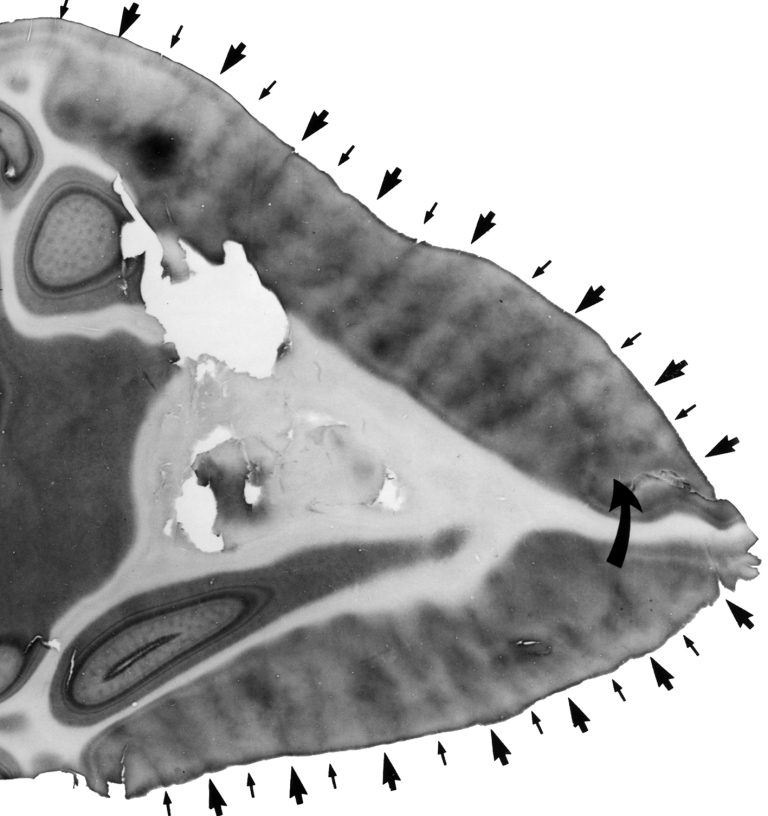

Striate cortex derives its name from the appearance of its surface, which resembles a bit of a zebra coloring (Fig. 24). Darker stripes visible on its surface were formed by cytochrome oxidase (COX) technique. They receive signals from the eye on the opposite side of the head than a given part of the V1 cortex area (LeVay, Hubel and Wiesel, 1975; Xinjich and Horton, 2002). As we remember, after the optic chiasm, the individual layers of cells in the right and left LGN receive signals from both the eye on the same and opposite side of the head. It is similar in the V1 cortex area. Nerve impulses from the eyes on both sides of the head travel to its both parts, i.e. from the right and left side of the brain.

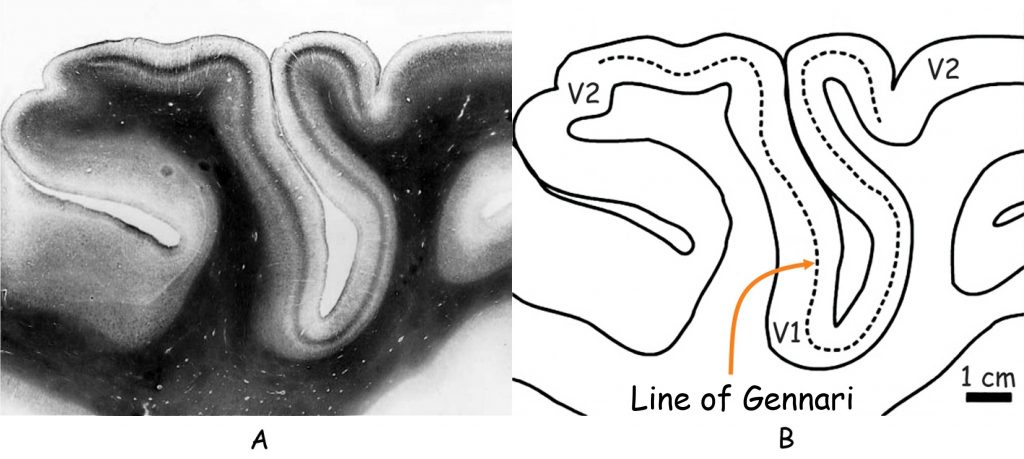

In order to understand the origin of the stripes on primary cortical surface, it is necessary to look into its inside by cutting it crosswise (Fig. 25). At first glance, there are only three stripes on the cross-section of the visual cortex, two slightly lighter and one darker in between. The darker one is called the stria of Gennari, named after its discoverer, Italian medical student Francesco Gennari. No other details of the internal structure of the primary visual cortex can be seen without the microscope.

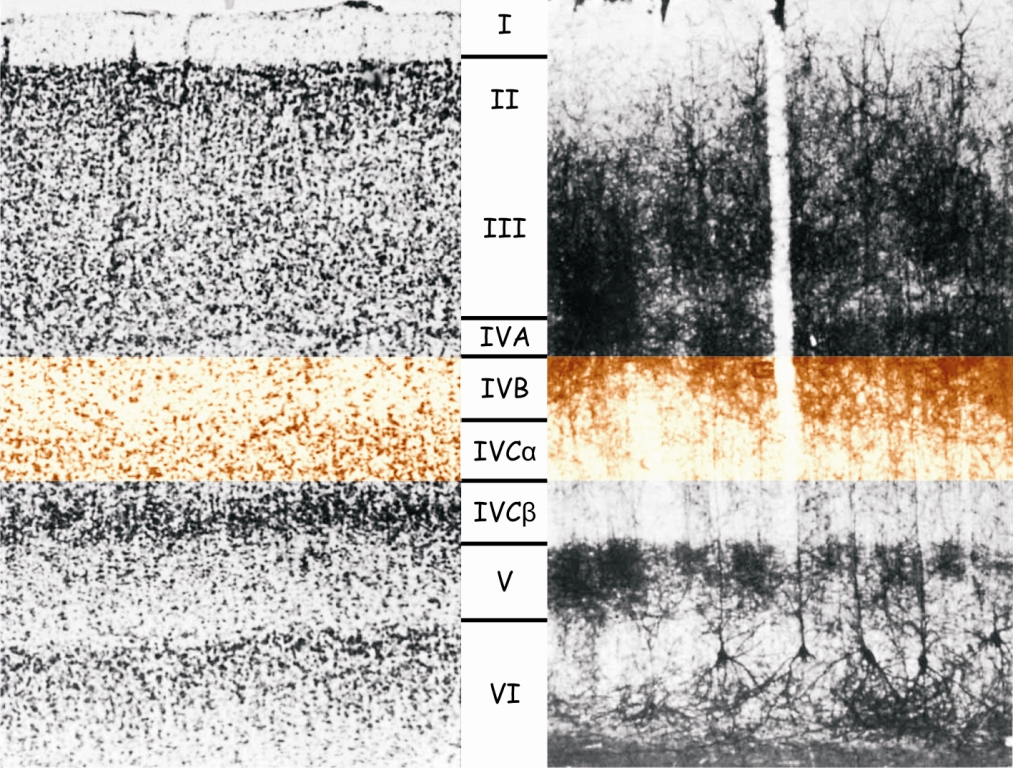

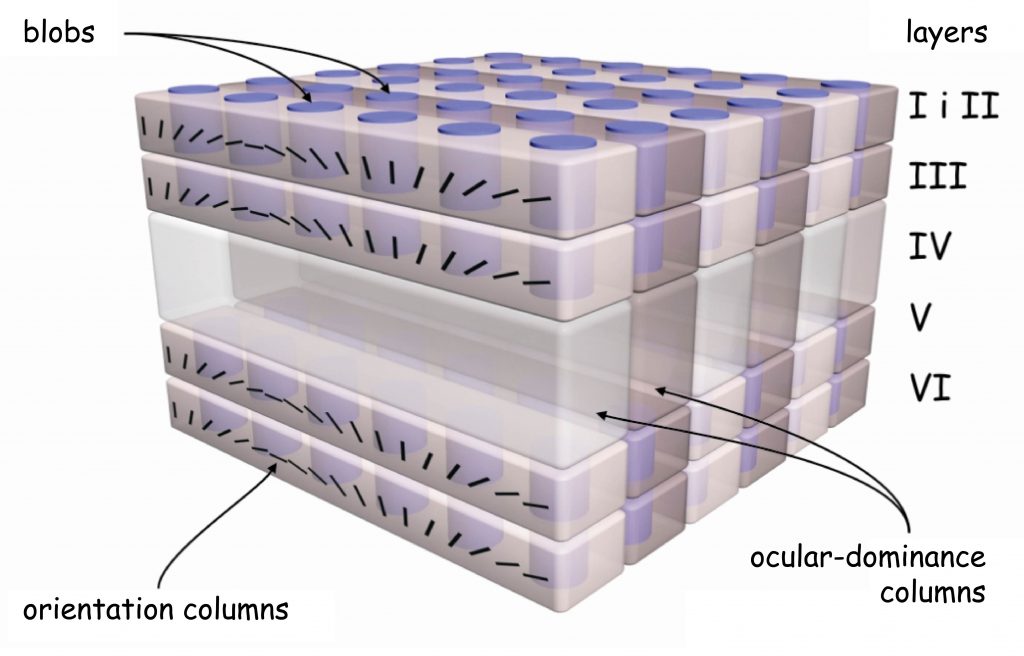

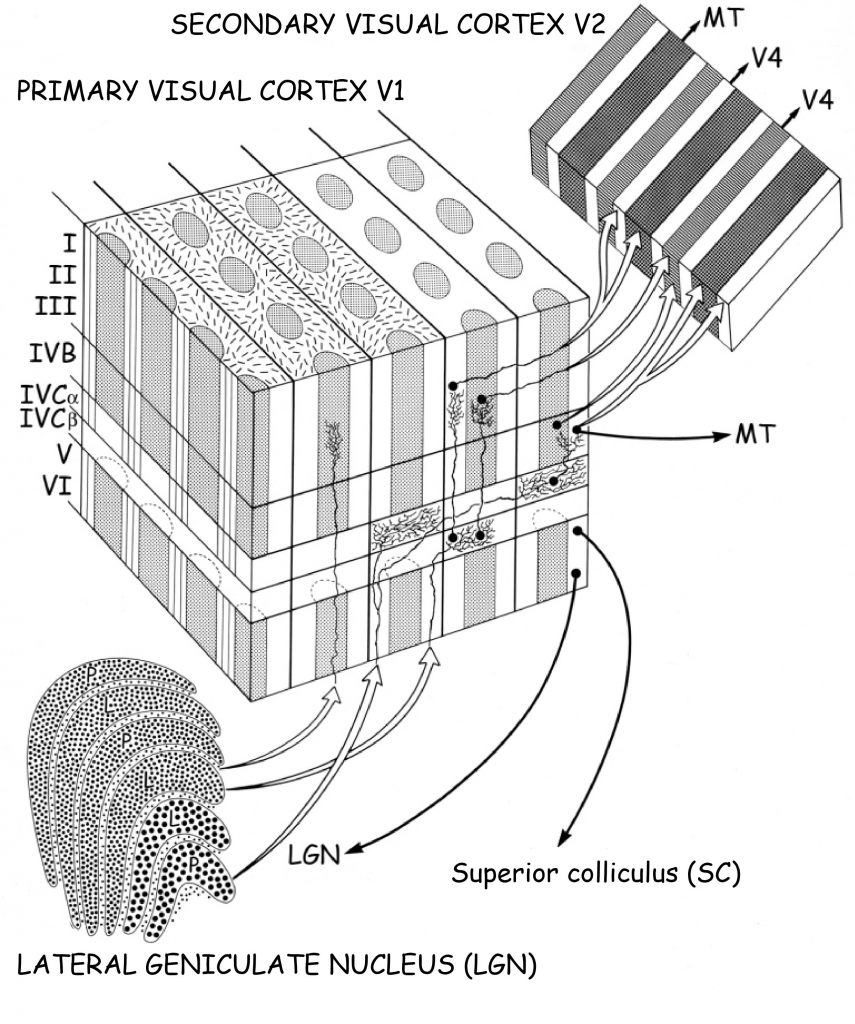

However, if we look at it under the microscope — and especially after the previous staining of the cells located there — then we will see something that resembles an orderly structure of layers in LGN. The cells in V1 cortex area are arranged in 6 horizontal layers, marked with Roman numerals, from I to VI. The widest layer is layer IV, which is divided into four thinner layers: IVA, IVB, IVCα and IVCβ (Fig. 26).

The dark stripe of Gennari, visible in Fig. 25, is a part of layer IV, or more precisely the area where the connections between layers IVB and IVCα are located. Layer IV serves as an entrance gate to the visual cortex of the brain. It is through it, and especially through the IVCα and IVCβ layers, that nerve impulses from LGN, and earlier from the retina of both eyes, reach the primary visual cortex by means of the optic radiation. Sensory data is sent to cells in other layers of the V1 cortex area only from layer IV.

Islamic architecture of V1 cortex

Another curiosity related to the structure of the primary visual cortex is that the cells that make up its individual layers are joined together to form clear columns. They are well visible in the image taken with the Golgi method in Fig. 26. Dendritic trees of the cells in layer I are like the column heads, the base of which are the cells in layer VI, and the stem — the cells in layers II to V.

Looking at the striped surface of primary cortex in Fig. 24, we can only see the heads of the individual columns. However, if the columns of cells that receive nerve impulses from only one eye are stained, then all the heads of these columns will also assume this color, and on the cortical surface we will see darker stripes (Fig. 27). They indicate where in the visual cortex there are rows of cells that receive and process signals from the right or left eye. They create some kind of colonnades, such as the ones we can admire in the Great Mosque of Cordoba. Right next to one colonnade there is another row of neuronal columns, receiving signals from the second eye, and so on.

Cell orientation

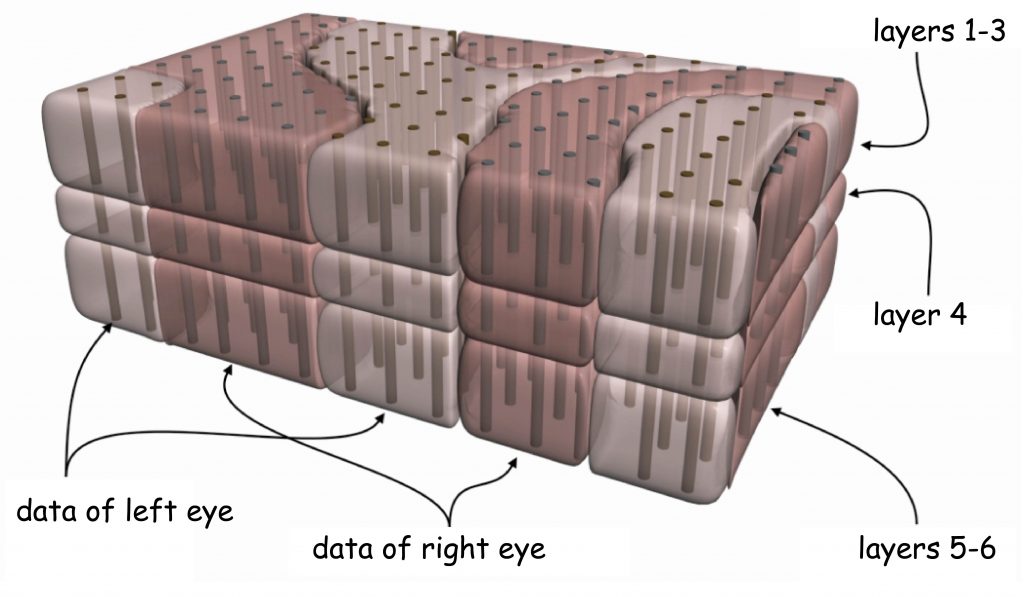

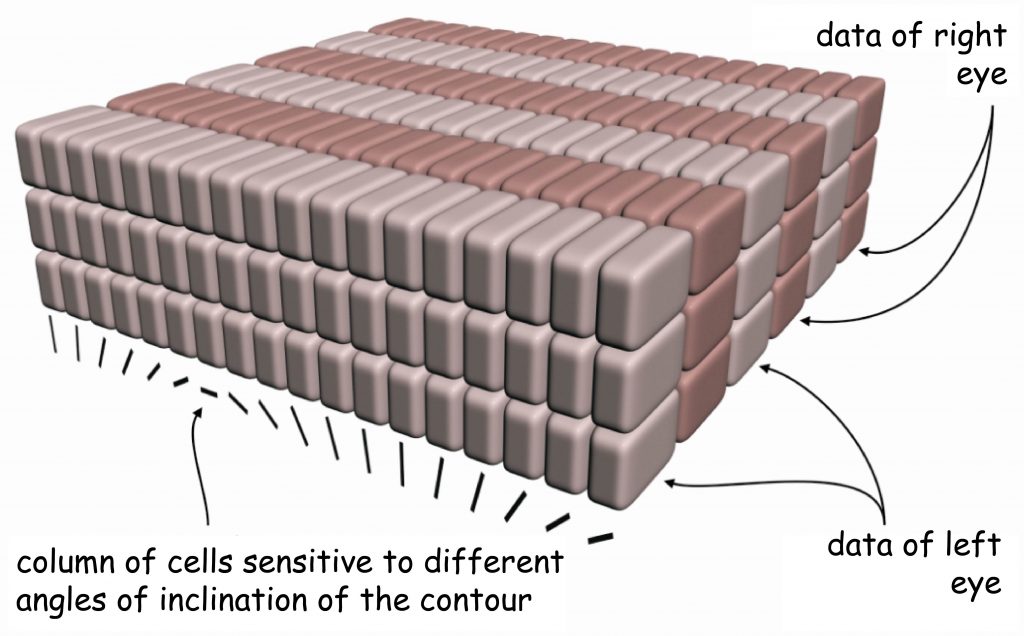

The discovery of the next property of the primary visual cortex ended with the award of the Nobel Prize in 1981 to two neurophysiologists David Hubel and Torsten Wiesel. While experimenting with feline visual cortex they found that columns of cells, which react to the data incoming from one eye, standing beside one another, are specialised in terms of spatial orientation of the fragments of objects’ edges recorded by the eye. It means that within cortex V1, cell columns are ordered in two planes.

One plane is defined by the cells reacting independently from one another to data incoming from the right and left eye. In turn, the other plane is located across the first one and comprises cells which react to various angles, at which the edge fragment of a given object is seen, regardless of which eye recorded it. Describing this astonishing structure of the primary visual cortex, Hubel and Wiesel (1972) proposed a functional model of cell column arrangement in V1, known as the ice-cube model (Fig. 28).

Of course, this model should not be treated literally, if only because — as we saw when looking at the V1 cortical surface — rows of neuron columns reacting to signals coming from one or the second eye are not arranged in straight lines. Nevertheless, this model illustrates perfectly the general principle of functional ordering of the internal structure of the primary visual cortex.

It seems that already at the initial stage of the visual pathway, the image projected onto the retina of the eye is spatially divided into thousands of small pieces. Each of these pieces contains information about its location on the retina and about the orientation of the edges of the adjacent surfaces differing in light intensity. In the primary visual cortex, the content of all these pieces is analysed and, depending on the retina site and the orientation of the registered edges, a specific group of cell columns is activated. This is probably how the information on shapes of things is being encoded. In the further stages of the visual pathway it is used to reconstruct the objects forming the entire visual scene.

At first glance it may seem completely irrational that, when a complete image of a visual scene is obtained on the retina, the visual system first decomposes, and then reassembles it. On closer examination, however, we must conclude that it would be difficult to invent a more economic system for visual data processing. What needs to be done is to consider the unimaginable amount of data provided to the brain by stimulated cones and rods every second when our eyes are open. In other words, the complexity and variability of visual scenes over time require from the visual system a system approach based on a number of clear principles. They come down to the analysis of visual data due to the most important properties of both the visual scene and the device that registers them, i.e. the retina.

If countless shapes of all things seen can be determined by means of the differences in the brightness of two-dimensional planes on which the edge of the shape is outlined, it is incomparably easier to encode these shapes using a relatively small number of cells sensitive to edge orientation within the range from 0 to 180°, than by means of an impossible to estimate number of cells and their connections, which would remember each shape with all its appearances. Although this option is “seriously considered” by the brain, also for economic reasons.

It transpires that even single cells can encode shapes of complex things, but only those that are very well known and strongly fixed in brain structures. Obviously, not in cortex V1, only at further stages of the cortical pathway of vision. They are the so-called grandmother cells, which react selectively to particular objects or people (Gross, 2002; Kreiman, Koch and Fried, 2000; Quiroga, Kraskov, Koch and Fried (2009); Quiroga, Mukamel, Isham, Malach et al. (2008). At this point, it is worth reminding that the possibility of encoding complex shapes by single cells was discovered and described already in the 60s by an eminent Polish neurophysiologist, Jerzy Konorski (1967). He called these cells gnostic neurons. In accordance with his concept, they constituted the highest level of processing the data on the shapes of the seen objects, recorded by the gnostic fields, composed of many cells at the earlier stages of the visual pathway.

Returning to the equally grand discoveries of Hubel and Wiesel it is worth adding that they identified not one, but two types of column-forming cells in cortex V1. Apart from columns of cells sensitive to the angle of inclination of edges, the so-called simple cells, they also found presence of cells, which react to the direction of movement. They are the so-called complex cells. The movement of objects in the visual scene will not be analysed within this monograph, therefore I will not focus on this thread here.

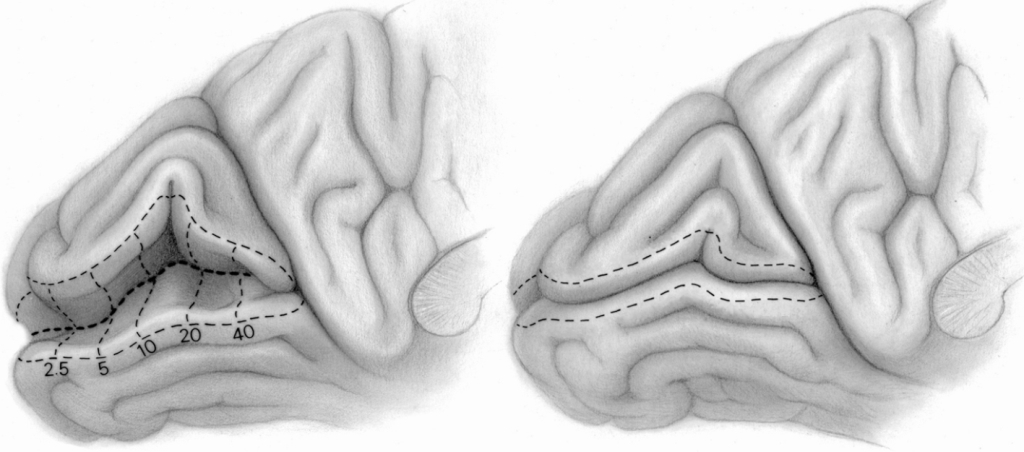

Retinotopic map in the striate cortex

The description of the extraordinary properties of the primary visual cortex presented in the previous chapter is far from complete. Knowing already the internal structure of the V1 cortex, let us return again to its surface. And here is where the next surprise is awaiting us.

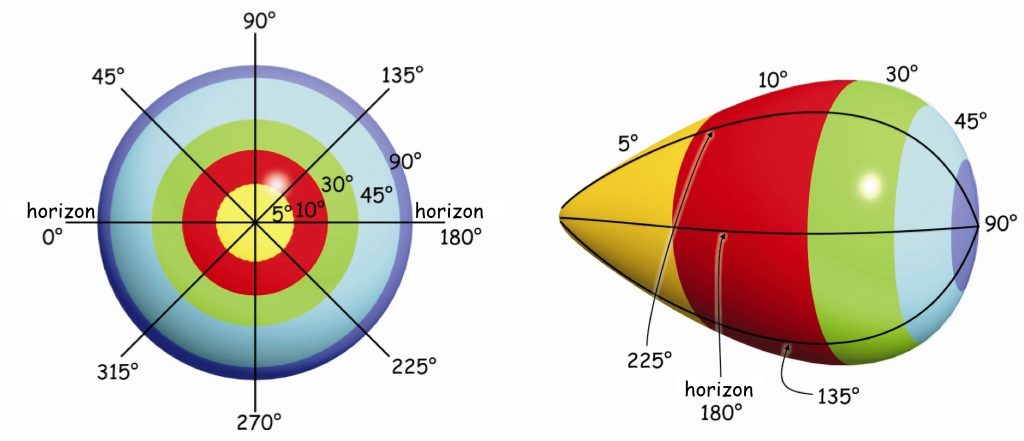

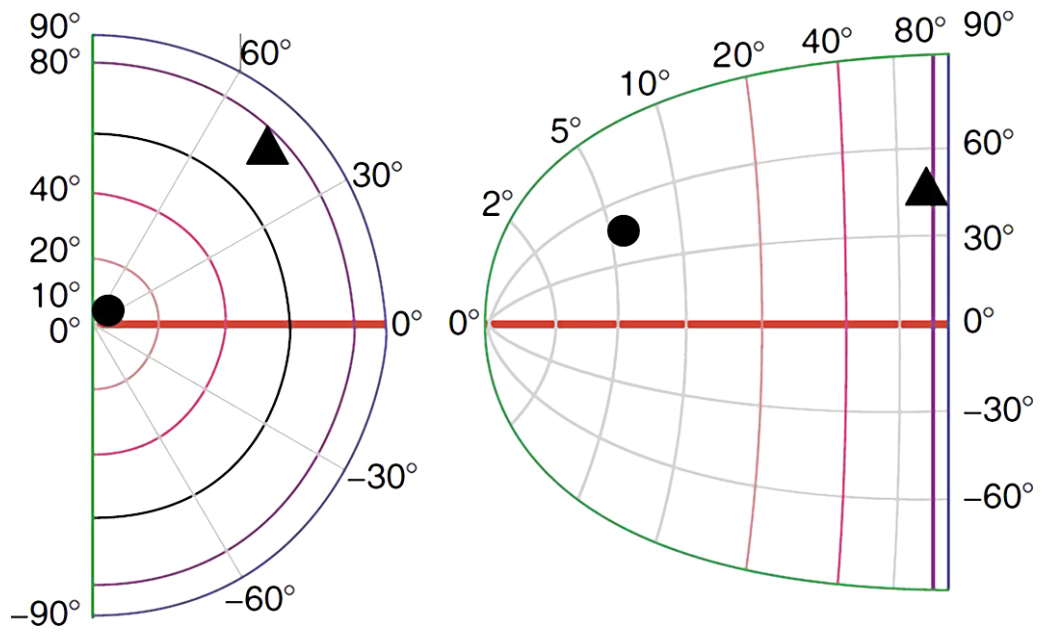

The rows of cell columns that receive signals from the retina of the right and left eye reflect the order of the photoreceptors within them. This is not a 1:1 representation, but an algorithm is known to predict which neuron columns in the primary visual cortex will respond to a specific part of the retina in response to stimulation. The complex logarithm transformation was developed by Eric L. Schwartz (1980) on the basis of the anatomical location of connections between ganglion cells that collect information from different parts of the retina and different parts of the V1 cortex in the right and left occipital lobe (Fig. 29).

Figure 29 shows that approximately 30% of neuronal columns in the primary visual cortex receive data from the macula area, which covers a field of vision of up to 5° (yellow color). The next, third part of the neuronal columns in V1 is responsible for the area of the retina between 5° and 20° of the field of vision (red color) and, finally, the rest of the cortical neurons in the area 17 (green, light and dark blue colors) covers the remaining area of the retina, i.e. between 20° and 90° of the field of vision.

Since, as we remember, most of the data travels along the axons of the midget ganglion cells and bistratified ganglion cells, so the so-called parvocellular pathway, the most columns of cells receive these data in the V1 cortex . If we remember that tiny ganglion cells support mainly the areas of the retina that are located in the fovea, then it will be easy to understand that the number of columns of cells involved in this type of data is disproportionately higher than the number of columns receiving data from other parts of the retina.

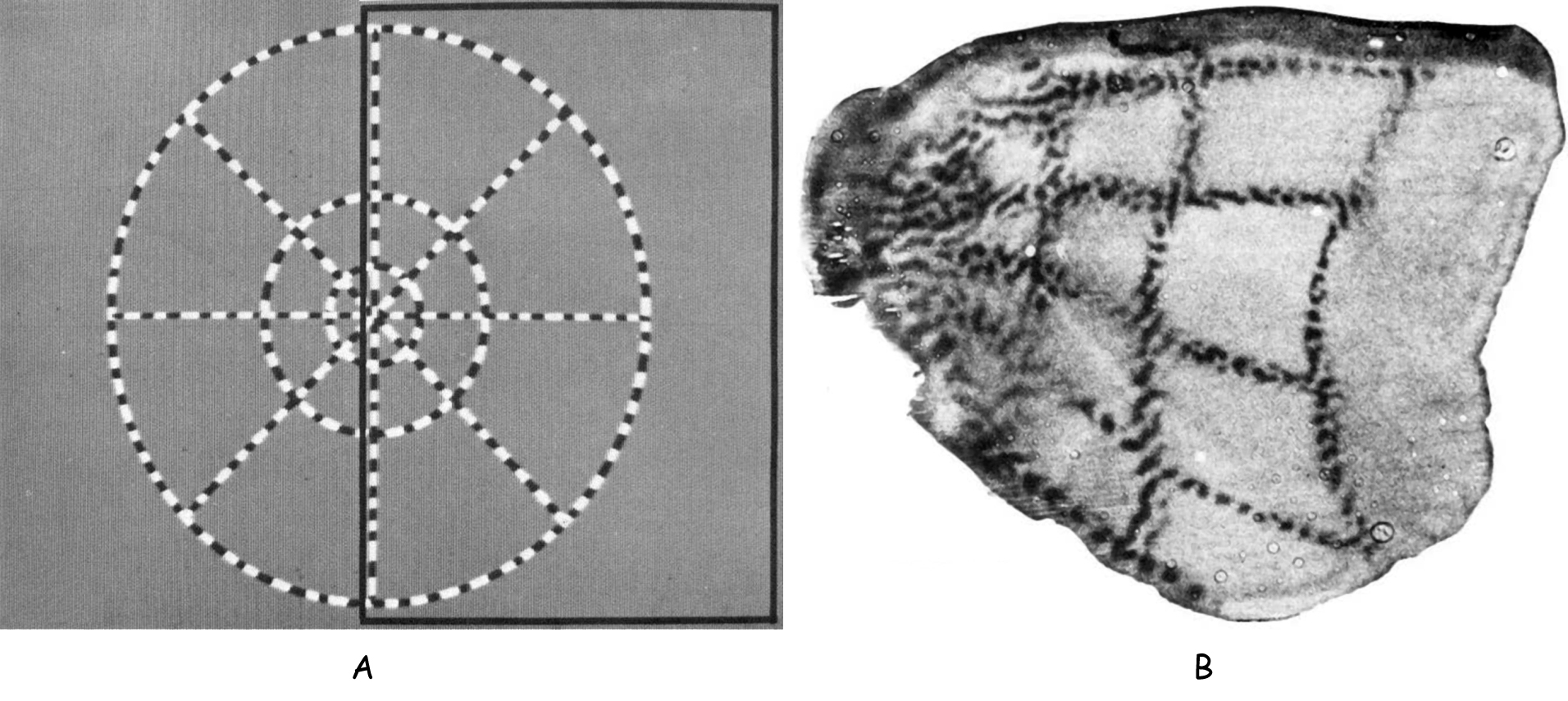

One of the first studies to record the visual stimulus reactions of cells in the V1 cortex area was conducted by Roger Tootell and his associates on macaques (Tootell, Silverman, Switkes and de Valois, 1982). They demonstrated the effect of reflecting a pattern of a figure seen by a monkey in the V1 cortex area (Fig. 30). It is worth noting that the clearest representation was obtained in the IVC layer. This is understandable as it is at this level that signals from LGN reach V1.

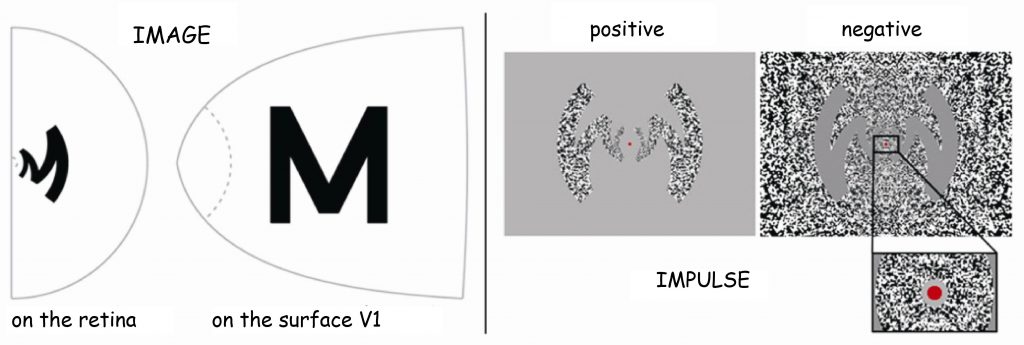

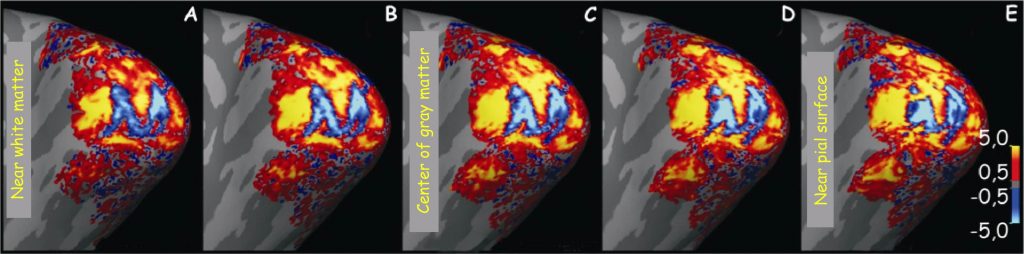

The second example illustrates even more clearly how much we already now about the retinotopic organisation of cortex V1 — not only in macaques, but also in humans — and its activity during seeing. In the studies, to which I would like to draw attention to — the reaction of human visual cortex was recorded using the fMRI 7T scanner (Polimeni, Fischl, Greve and Wald, 2010). This time, the studied person viewed two versions of the letter “M”, positive and negative, on a computer screen (Fig. 31B). Its form was adequately processed using the above-mentioned Schwartz algorithm, which determines the spatial relationships between the image projected onto visual hemifield and its reflection on the surface of the cortical surface (Fig. 31 A).

By exposing the distorted visual stimulus, it was intended to check to what extent neuronal columns, which are sensitive to different angles of edges formed at the contact point of contrasting surfaces, would accurately reflect the form of the letter “M”. The activity of neurons in the V1 cortex area was measured during the visual fixation by the respondents on the middle red spot and it was found that at the IVC layer level the image of the letter “M” was fairly well reflected (Fig. 32 C; blue shape).

I believe we do not have to convince anyone what huge opportunities arise from these discoveries in terms of restoring vision in people who lost it as a result of an illness or a mechanical damage to the eye retinas. Instead of the data sent by the photoreceptors located in the retinas of the eye to V1 cortex, signal can be sent to them directly from the video camera. After processing it using the Schwartz algorithm and stimulating cells in the primary visual cortex with electrodes, a visual experience can be created. There is a lot of evidence indicating that it soon will be possible.

Some more information about blobs and colors

While discussing the structure and the functions of the primary visual cortex, we cannot omit one other element. Between cell columns, which separately receive information on the spatial orientation of edge fragments from both eyes, there are small cylindrical clusters of neurons playing an important role: among other things, in color perception (Livingstone and Hubel, 1984). They can be found in basically all layers of the visual cortex, except for layer IV (Fig. 33).

In the Polish translation of the English name “blobs”, they are referred to as “kropelki” [“droplets”], “plamki” [“spots”], and even “kleksy” [“blotches”] (Matthews, 2000), while the original name of this structure, discovered by Jonathan C. Horton and David Hubel (1981) i.e. “łaty” [“patches”] is used less frequently. These names derive from the fact that after dissecting V1 cortex longitudinally and staining it using the cytochrome oxidase method slightly irregularly distributed blobs between the cell columns, reacting to the orientation of the edges of the seen objects, can be observed on its surface. 34).

Inputs and outputs of V1 cortex

Wrapping up the journey into the primary visual cortex, it is worth ordering the knowledge regarding its connections with other structures of the brain. It is, first and foremost known, that most data from the LGN reach V1 cortex via a wide tract, also known as optic radiation. The axons of cells forming optic radiation are connected with the cells of V1 cortex at the level of layer VI. These axons form three visual pathways: magnocellular, parvocellular and koniocellular.

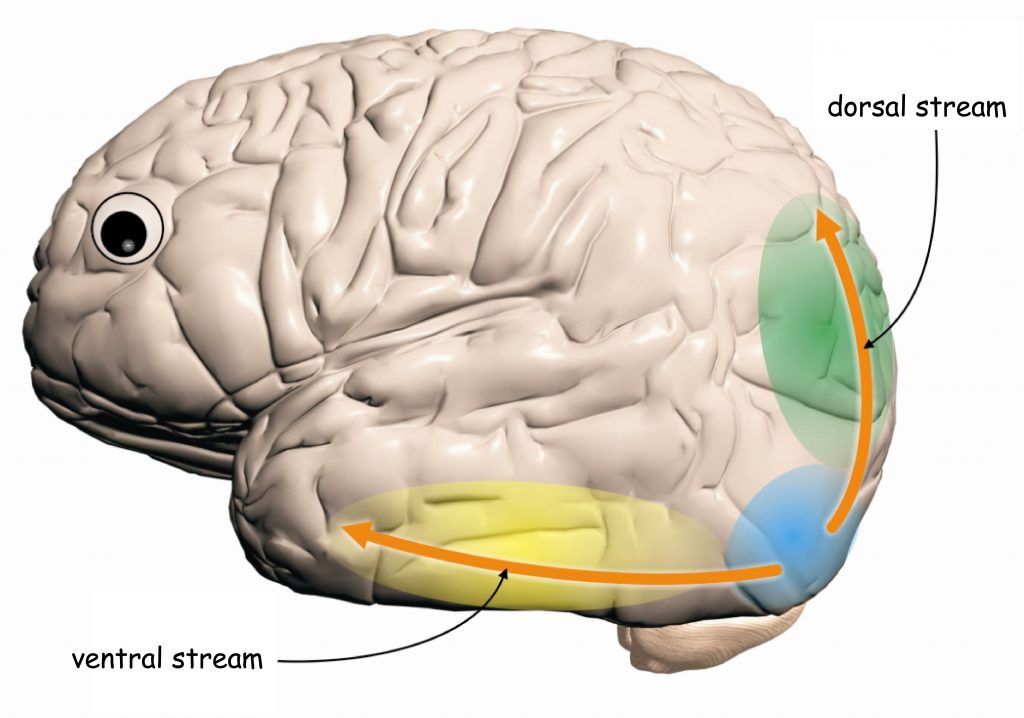

The magnocellular pathway is connected with V1 cortex at the level of IVCα layer, and it conveys the data concerning brightness of visual scene fragments. They are not encoded with resolution as high as in the case of the data flowing through the parvocellular pathway, but they encompass much larger areas of the watched scene. These data constitute a basis for global, spatial organisation of objects located in a framed scene. Moreover, the magnocellular pathway conveys signals which constitute a basis of seeing movement. Both the perception of global spatial organisation within a visual scene and the perception of its variability are essential for out orientation in space. The data coming from the IVCα layer through the magnocellular pathway reach layer IVB, neighbouring it. From that area, they will be sent in two directions: to V2 cortex and V5 cortex, also known as the medial temporal area (MT), which specialises in processing data concerning movement in a visual scene (Fig. 35).

The axon-rich parvocellular pathway connects with V1 at the level of layer IVCβ. It conveys two types of information: on the length and intensity of the light wave stimulating various groups of cones in the retina, namely on the color and luminance. These data are on the one hand characterised by high spatial resolution, and on the other they are limited to a relatively small portion of the field of vision. The information on the length of the light wave, particularly in terms of the opposition of the red and green color, is sent from layer IVCβ to clusters of neurons forming blobs, and from there — to V2 cortex. On the other hand, the information on the intensity of the lighting, which constitutes an important basis for seeing edges, flows from layer IVCβ, along the columns sensitive to specific angles of edge fragments (especially in layers II and III) and is sent to V2 cortex from this place as well.

It is somewhat different in the case of the koniocellular pathway, which connects directly with clusters of neurons forming cylindrical blobs in V1 cortex, without the intermediation of columns in layer IV. Data concerning the color blue and yellow are transmitted through the koniocellular pathway. From the blobs, they flow further towards V2 cortex.

V2 cortex

A vast majority of the data reaching V1 cortex from the retina through the LGN is forwarded to V2 cortex or, according to Brodmann classification — field 18. Just like V1, it consists of neighbouring cellular structures of various anatomical structures. After being stained they can be easily told apart as they form characteristic stripes (Fig. 36). We can basically distinguish three types of stripes in V2 cortex: thin stripes, thick stripes, which are stained dark, and the so-called pale stripes, which remain bright during staining (Matthews, 2000) The stripes lay in the same pattern along the entire V2 cortex, that is: thin stripes — pale stripes — thick stripes — pale stripes — thin stripes — pale stripes and so on.

Moreover, a half of the cells in V2 cortex reacts almost identically to the cells in V1 (Willmore, Prenger and Gallant, 2010). It most probably means that they process data concerning simple features of a visual scene, such as parts of edges, their orientation, the direction of movement and colors (Sit and Miikkulainen, 2009). These cells, however, are not as well organised in terms of topography as those in V1 cortex. The other half of V2 cells is responsible for more complex features of visual scenes. They are, among other things, active in response to illusory contours or contours defined by the texture of the surface (von der Heydt, Peterhans and Baumgartner, 1984; von der Heydt and Peterhans, 1989) as well as complicated shapes and their orientation (Hegdé and van Essen, 2000; Ito and Komatsu, 2004; Anzai, Peng and van Essen, 2007).

Each type of stripes receives different data from V1, in accordance with the breakdown of the visual pathways into three categories on the basis of their functions, which has been already mentioned several times. Thus, data from the blob areas in V1 are forwarded to the cells forming thin stripes in V2. In these stripes, the anatomical differences between the cells forming koniocellular and parvocellular pathways are fading out, and integration of all data which constitute the basis for seeing colors occurs. In other words, neurons forming the thin stripe in V2 cortex are specialised organising data with various lengths of the electromagnetic wave, which creates the entire spectrum of the seen world. These data are then forwarded both directly and via V3 cortex to V4 cortex, also known as the cortical color centre (DeYoe and van Essen, 1985; Shipp and Zeki, 1985; van Essen, 2004). On the basis of fMRI examination results Derrik E. Asher and Alyssa A. Brewer (2009) suggest that in terms of color processing in V4 field, there are significant differences between the hemispheres. It turns out that in the cortical color centre on the right, the neurons are much more sensitive to chromatic stimuli than to achromatic stimuli, and in the V4 field, located on the left side, no differences in the response of the neurons to chromatic and achromatic stimuli was found.

As we remember, high-resolution vision of shapes based on contrasts in the luminance range has its source in the data transmitted via the parvocellular pathway, which, from the colonnades in V1, reach the pale stripes in V2. It is one of the most important structures in the visual cortex, the activity of which constitutes the basis for organisation of data enabling recognition of object shapes in visual scenes. Data from pale stripes in V2 are also sent to V4.

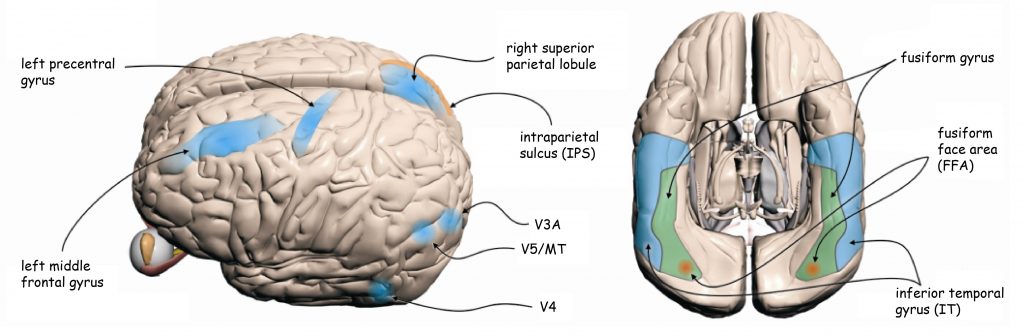

Brain structures which receive projections from V4, namely the inferior temporal gyrus (IT) and the fusiform gyrus with a particular area known as the area of face recognition fusiform face area (FFA) are responsible for integrating the data concerning shapes of the objects seen (Fig. 37).

The third type of stripes in V2 cortex are dark and thick stripes. Two categories of data from V1 reach this area: data on the spatial organisation of a visual scene and movement. Data on global spatial organisation enable both verification of the presence of certain objects in visual scenes (thus, they also constitute the basis for seeing their shapes) as well as their distribution in relation to each other. Due to relatively low resolution, these date perform the function of general orientation in space and — depending on the situation — they constitute an impulse to focus attention and perform more detailed analysis of selected parts of a given scene by systems responsible for high-resolution seeing. Signals coding information concerning the global spatial orientation are forwarded to V3 cortex.

On the other hand, the second category of data flowing from V1 to the thick, dark stripes in V2 constitutes the basis for seeing movement. From there they are led to the aforementioned MT area, namely V5 cortex and further V3A, which constitute the cortex centre of movement perception (Roe and Ts’o, 1995; Shipp and Zeki, 1989) (Fig. 37).

Ventral and dorsal paths

Apart from integrating the data incoming from V1, V2 cortex also constitutes an extremely important node in the visual pathway, in which two new, partially independent of one another, visual pathways begin: the ventral path and the dorsal path (Milner and Goodale, 2008). Their names also derive from their location in the cerebral cortex. If we imagine the brain as an animal, for example a fish, then the structures located at its top surface, namely the parietal lobes, would resemble the dorsum, whereas the structures located at the bottom (for example the temporal lobes) — with the abdomen (Fig. 38). Hence the names of the paths. Just like the subcortical koniocellular, parvocellular and magnocellular pathways, located at the earlier stages of the visual pathway, also both cortical paths differ from one another in terms of their anatomy and function.